In previous posts, I’ve outlined a framework for the transition to hybrid cloud and explained how NetApp Kubernetes Service (NKS) meets steps 2 and 3 of this process. In this post, we will look at NetApp Fabric Orchestrator and apply the same comparison that shows how linking our data repositories together is an essential hybrid cloud component.

Background

Before getting into details on Fabric Orchestrator, we need to take a moment and look at NetApp’s Data Fabric strategy. At Architecting IT, we’ve been talking about the Data Fabric strategy for almost four years, including multiple podcasts and blog posts.

Our first article on data mobility was posted almost exactly four years ago and referenced presentations from NetApp on the fabric concept, as well as other vendors’ products that were looking to achieve similar outcomes. This work was part of broader research looking at data mobility techniques in general. You can find links to this content throughout this post.

Data Silos

Although the initial NetApp Data Fabric thinking didn’t go into specific detail, we could see the apparent challenge that would result from having data in multiple public and private clouds. Mobility means having the capability to make data mobile – not stored in a fixed location, but available wherever it’s needed. In practice, this is a hard task to achieve (I know, I looked into forming a start-up to cover this specific challenge). Data has inertia and incurs costs to move between clouds.

Acquisitions

The process of developing solutions to move data silos to a data fabric has, in part, been achieved through multiple NetApp acquisitions. First Greenqloud in 2017, then StackPointCloud in 2018 and most recently, Talon Storage in March 2020. Each of these companies brings IP and knowledge to solving the data fabric challenge.

Fabric Orchestrator is a new solution bringing all of this knowledge together. We can see the components of Fabric Orchestrator in previous SaaS offerings from NetApp. At Insight Berlin in 2017, NetApp announced Cloud Sync and Cloud Orchestrator, the former of which was demonstrated onstage (watch from about 1h31), moving and synchronising data between an on-premises and Azure data source. (Side Note: the NetApp Cloud Portal looked pretty basic at this point. It’s interesting to see how things have changed in 2.5 years).

Cloud to Fabric Orchestration

Cloud Orchestrator was about managing the automated creation of data sources in clouds and connecting them to virtual machines and containers. Fabric Orchestrator moves a step further from Cloud Orchestrator and introduces workflow to manage this process at a higher level. We can now apply policies to data sets, independent of how they are attached to applications. Workflow enables data, for example, to be auto-tiered to less expensive storage or another cloud entirely.

5 Step Process

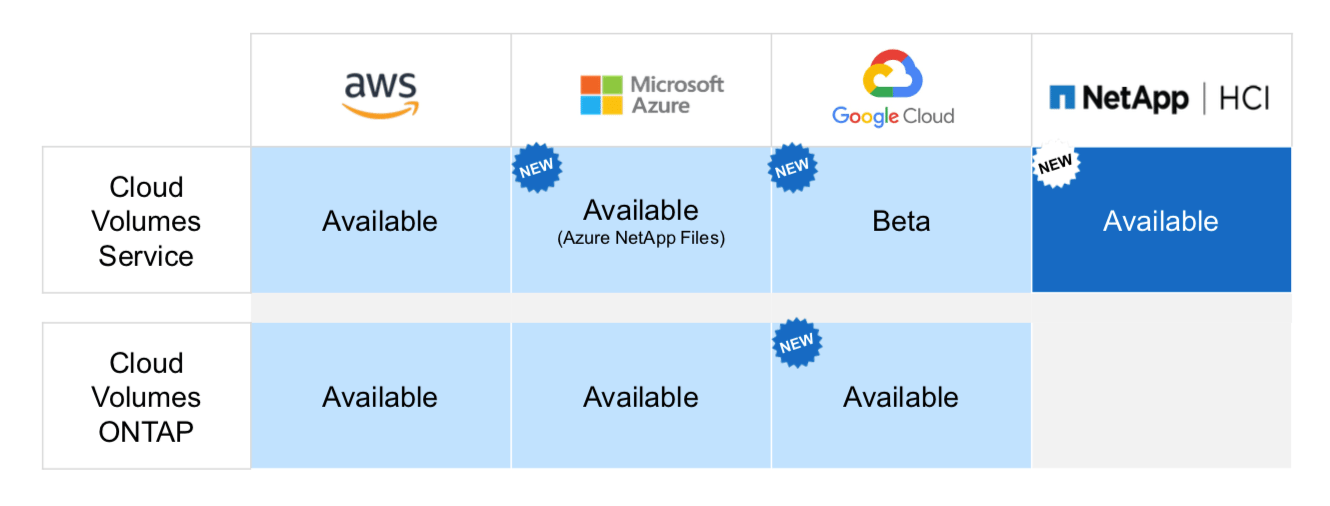

How will Fabric Orchestrator implement features of our hybrid cloud 5-step process? Step 2 talks about standardisation, and we already see that within the NetApp cloud offerings. Figure 1 shows NetApp storage services offered both in the public cloud and on-premises. One intriguing aspect of these solutions is the ability to run the Cloud Volumes Service (CVS) on-premises under HCI, rather than to deploy an ONTAP instance or having a physical appliance. A few years ago, it would be hard to imagine NetApp not pushing ONTAP as the solution for all customer requirements.

Figure 2 shows the features of Fabric Orchestrator that are available today. We can see a lot of “coming soon” features, but many components are already available. These offerings still very much align with the “standardised” step 2 of the 5-step process. For example, “Code to One Extensible Data Fabric API” brings a standardised interface. “Manage Through Global Metadata” provides a single view of data that abstracts from the underlying physical platform.

Features such as “ONTAP Automation” and the “Data Movers” will address step 3 (automation). Some of the workflow is already available (“Orchestrate and Automate with Fabric Flows”, for example). We’ve already highlighted that Cloud Sync previously provided some of this functionality. We can expect to see more coming directly into Fabric Orchestrator itself. Figure 3 shows services already available in NetApp Cloud as a reminder.

Optimisation

What about moving to step 4 and optimisation? At a recent Tech Field Day event, Nick Howell, Global Field CTO for Cloud Data Services discussed some of the future enhancements to Fabric Orchestrator. We can expect to see data optimisation implemented through the option to move data to the most cost-effective location automatically. Eventually, this may also mean moving applications, although the current model is for applications to drive data movement and not the other way around.

The video from the TFD event is embedded here. It’s longer than the average at around 1h08, however there’s some great additional content (including Nick without a real beard) such as screenshots of Fabric Orchestrator as offered today.

The Architect’s View

It’s impossible to offer a fully-featured data mobility solution from day one. As a SaaS offering, NetApp can change the interface, the APIs and evolve Fabric Orchestrator more rapidly than could be achieved running everything on-premises. NetApp’s solution is to develop their cloud story in a way that can be aligned to the market. The migration to a hybrid cloud is a multi-step process, and tools like Fabric Orchestrator provide steps in that journey.

We can expect to see much more detail behind Fabric Orchestrator in 2020. I’m interested to see how the tool develops, but perhaps more critically, how the interfaces and APIs will allow both the automation of the platform and the integration of 3rd party solutions. Features such as data protection will rely on cloud-native capabilities and today that means working with the solutions offered by AWS, Azure and Google.

After that, I hope to see cost optimisation information and eventually the ability to place portable workloads with data, rather than the other way around. This places data firmly in the hub of operations – a true “data centre”.

Copyright (c) 2007-2020 Brookend Limited. No reproduction without permission in part or whole. Post #28c3. This content was sponsored by NetApp and has been produced without any editorial restrictions.