In a previous post, I outlined a framework for moving to the hybrid cloud. With a 5-step process, IT organisations transform themselves from ad-hoc providers of technology into teams that pick the optimal technology and providers to offer a genuine competitive advantage to the business. In this post, sponsored by NetApp, we look deeper into the challenges of standardisation and automation, examining how NetApp’s Kubernetes Service (NKS) helps deliver steps 2 and 3 of the hybrid cloud transformation process.

Background

Before we dive into the details of NKS, it’s worth doing some scene-setting. Many developers and IT organisations are focusing on a transition to micro-services and delivering applications with containers. Containerisation is a step further on from server virtualisation, where the aim was simply to consolidate workloads using virtual instances of previously physical servers.

With containers, applications are stripped back to only the executables and processes needed to run them. Multiple containers run on a single platform (e.g. Windows or Linux), theoretically allowing thousands of application components to run across a cluster of servers running container management solutions like Docker or Kubernetes.

Micro-services

Developing applications as micro-services provides for faster release cycles and additional benefits such as horizontal scaling (scale-out), as long as the application can be broken down into parallel executing components. The micro-services approach, compared to monolithic application development, enables individual components to be developed in parallel.

An excellent way to think of a micro-services architecture is in comparison to Object-Orientated Programming (OOP). In OOP, objects perform functions and have interfaces for accessing data (attributes and properties) or running procedures (methods). Exactly how the object is coded isn’t crucial to another part of the software consuming that object – as long as methods and properties behave as expected.

Micro-services offer the same level of abstraction as OOP, accessing services through APIs across a network. Micro-services have the benefit of being language independent. Theoretically, each micro-service could be written in the most efficient programming language available.
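To make the comparison concrete, here is a minimal Python sketch using only the standard library. The `/price` endpoint, the JSON fields and the function names are assumptions made up for illustration; they don't come from any particular product. The consumer knows only the URL and the shape of the response, in much the same way that an OOP caller knows only a method signature.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """A toy 'pricing' micro-service; the endpoint and payload are hypothetical."""

    def do_GET(self):
        if self.path != "/price":
            self.send_error(404)
            return
        body = json.dumps({"sku": "demo", "price": 10}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_price(base_url):
    """The consumer: depends only on the URL and the JSON contract."""
    # An empty ProxyHandler bypasses any system proxy for this local call.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(base_url + "/price", timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


def demo():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    worker = threading.Thread(target=server.serve_forever, daemon=True)
    worker.start()
    try:
        return fetch_price("http://127.0.0.1:%d" % server.server_port)
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` starts the service, queries it once and shuts it down. The service behind `/price` could be rewritten in another language without `fetch_price` changing, provided the response keeps the same fields.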

Container Orchestration

Moving beyond simple applications requires a framework and tools for container orchestration and management. Applications that run as a collection of micro-services have dependencies on each other and need managing for failure and scaling. Docker Swarm and Kubernetes are two examples of container management platforms that provide scalability and resiliency. Kubernetes has emerged as the de-facto standard for container orchestration and has become widely adopted by the industry.

Standardisation

Kubernetes offers a standard for running containerised applications across multiple platforms either in public or private cloud. Amazon Web Services offers EKS (Elastic Kubernetes Service) and Fargate. Microsoft offers the Azure Kubernetes Service. GCP has the Google Kubernetes Engine (GKE). Across the minor cloud service providers, there is a range of similar offerings. Red Hat offers the CoreOS Tectonic framework. Canonical has a Kubernetes distribution. Heptio (now part of VMware) has a solution that is being integrated into Project Pacific. Pivotal, another VMware property, has PKS, the Pivotal Container Service.

We can continue to list Kubernetes distributions and service offerings. It is clear that there is a wide range of solutions across multiple public and private clouds that all have the same common denominator of Kubernetes. It’s also possible to build and deploy bespoke Kubernetes solutions simply by running VMs locally or with virtual instances in the cloud.

Kubernetes is Hard

The challenge for using any of these environments is the learning curve needed to operate them. Cloud-based solutions are built and managed slightly differently to each other. Creating a custom Kubernetes environment is hard. Developers need to know how to integrate both storage and networking effectively.

Skills are required to harden environments and build in best practices. At the same time, new Kubernetes versions are released every three months. Each component (e.g. apiserver, kubectl) can have separate release cycles. Just keeping on top of the latest releases is hard. Pushing these changes into development and then production is even harder.

Now scale up the challenge by attempting to manage Kubernetes across all of the service provider solutions. The effort is immense (and rightly questionable) and made greater with the expectation of running multiple Kubernetes clusters – possibly one for each major application.

NetApp Kubernetes Service (NKS)

NetApp acquired StackPointCloud in September 2018. The technology from the acquisition forms the basis of NKS or the NetApp Kubernetes Service. You can find more background in this post from early 2019.

NKS is available through the NetApp Cloud Central portal, delivered as SaaS, which includes a free 30-day trial. The current release of NKS provides the ability to build Kubernetes clusters using on-premises or cloud-based infrastructure. This is not a deployment of native Kubernetes services (like Fargate), but one built on virtual instances, storage and networking. NetApp offers support across AWS, Azure and GCP for the public cloud and NetApp HCI, VMware and FlexPod for private clouds.

Figures 1 through 7 show the configuration process using NKS. In these examples, we build out two environments, one based on AWS, the other on Azure. These solutions run in the customer’s account, based on credentials and permissions provided by the customer.

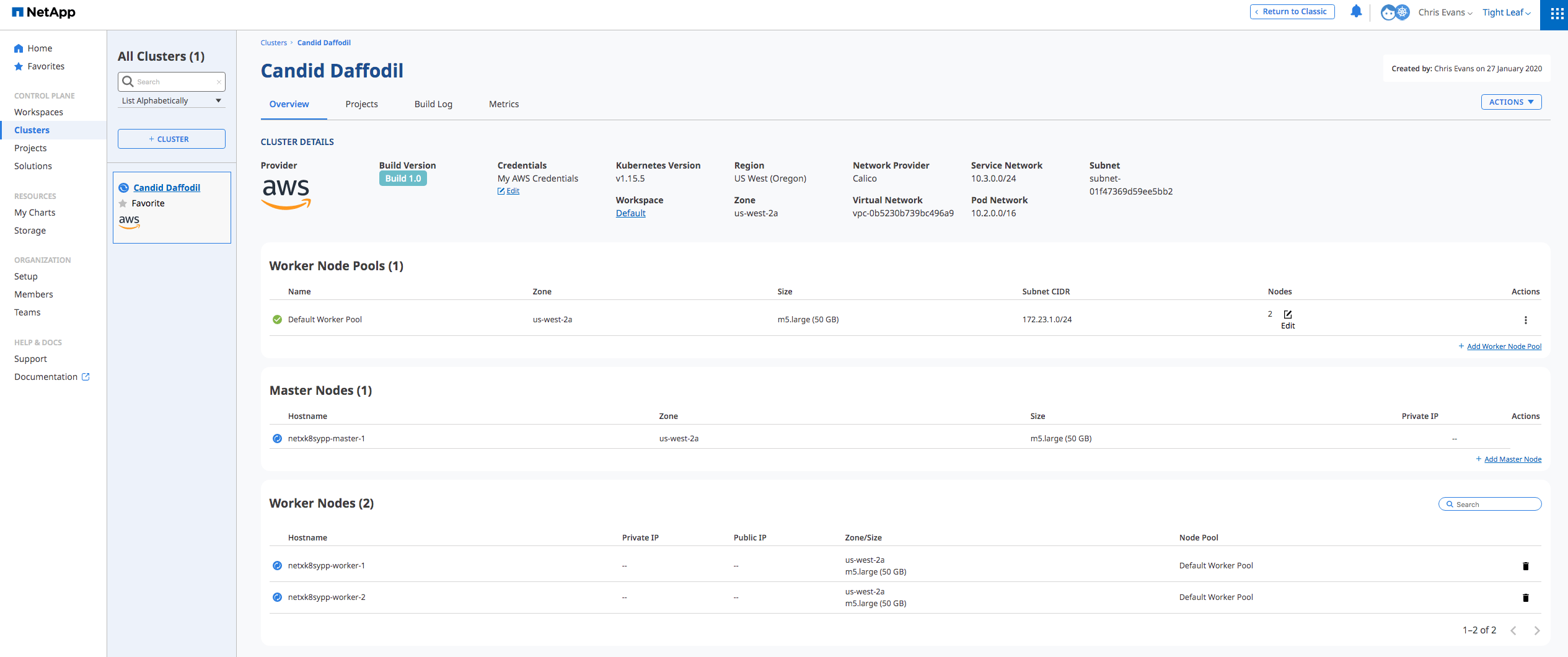

Figure 5 shows a three-node cluster, with one master and two workers, running on AWS. Figure 6 shows the equivalent service running in Azure. The NKS provisioning process handles the creation of load balancers, builds the virtual instances, then deploys and configures Kubernetes on each server.

From this point on, the user can then create projects and solutions on the cluster. NKS provides a kubectl config file to manage the cluster from the command line.
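As a rough sketch of what that looks like in practice, the Python helper below wraps `kubectl` and points it at a downloaded config file through the standard `--kubeconfig` flag. The file name `nks-cluster.yaml` used in the usage note is an assumption; use whatever name the downloaded file was saved under.

```python
import shutil
import subprocess


def kubectl_command(kubeconfig_path, *args):
    """Build a kubectl invocation that targets the cluster in kubeconfig_path."""
    return ["kubectl", "--kubeconfig", kubeconfig_path, *args]


def list_nodes(kubeconfig_path):
    """Run 'kubectl get nodes -o wide' and return its text output.

    Returns None if kubectl is not installed on this machine.
    """
    if shutil.which("kubectl") is None:
        return None
    completed = subprocess.run(
        kubectl_command(kubeconfig_path, "get", "nodes", "-o", "wide"),
        capture_output=True,
        text=True,
        check=False,
    )
    return completed.stdout
```

For example, `list_nodes("nks-cluster.yaml")` should list the master and worker nodes of the cluster that the file describes, assuming `kubectl` is on the path and the cluster is reachable.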

Hybrid Cloud Step 2 – Standardised

Step 2 of the hybrid cloud model describes the move towards standardisation of resources. IT organisations move from being focused on project-based delivery to one based on repeatable services. This transition is delivered in two ways:

- Externally to the business – the development of standard service offerings for the business to consume. Increasingly, these are more abstracted and talk about platforms, rather than components of infrastructure.

- Internally within IT – the IT departments standardise on the way in which services are delivered, allowing infrastructure to be easily replaced if necessary.

The first point delivers consistent services to the business, without developers needing to know the specifics of service implementation. The second point addresses the ability to choose the optimal technology that delivers to business requirements.

Looking at NKS, we can see that NetApp is providing a consistent service to the business. Developers can build Kubernetes clusters on-demand, with a standard pay-per-use service model. As new versions of Kubernetes are released, NetApp manages any configuration changes or differences in the deployment process.
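The idea of one standard request served by interchangeable infrastructure can be sketched in a few lines of Python. This is not the NKS API: `ClusterSpec`, `Provider` and the two provider classes are hypothetical names, and the steps simply mirror the provisioning sequence described earlier (load balancer, virtual instances, Kubernetes).

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class ClusterSpec:
    """The standard request a developer makes, independent of provider."""
    name: str
    masters: int = 1
    workers: int = 2

    @property
    def nodes(self) -> int:
        return self.masters + self.workers


class Provider(Protocol):
    """Anything that can turn a ClusterSpec into provisioning steps."""
    def plan(self, spec: ClusterSpec) -> List[str]: ...


class AwsProvider:
    def plan(self, spec: ClusterSpec) -> List[str]:
        return _steps(spec, "load balancer on AWS", "EC2 instance")


class AzureProvider:
    def plan(self, spec: ClusterSpec) -> List[str]:
        return _steps(spec, "load balancer on Azure", "Azure virtual machine")


def _steps(spec, balancer, instance):
    # Order follows the provisioning description: load balancer, instances, Kubernetes.
    return [
        "create %s for %s" % (balancer, spec.name),
        "build %d x %s" % (spec.nodes, instance),
        "deploy and configure Kubernetes on %d nodes" % spec.nodes,
    ]


def request_cluster(provider: Provider, spec: ClusterSpec) -> List[str]:
    """The caller is written once against Provider, not against a specific cloud."""
    return provider.plan(spec)
```

The same `ClusterSpec("demo")` can be passed to either provider. Only the infrastructure-specific steps differ, which is the property that lets IT swap the underlying platform without changing what developers ask for.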

Hybrid Cloud Step 3 – Automated

Step 3 of the hybrid cloud model addresses automation. With a standard service model in place, businesses can consume IT through portals, APIs and CLIs. IT teams get out of the path of delivery, moving to manage the delivery and monitoring of infrastructure, rather than the direct provisioning of services. Automation also provides the ability to start aligning on-premises IaaS (Infrastructure as a Service) offerings with those from public cloud providers.

NKS addresses these requirements directly. Developers can provision NKS and operate clusters through a GUI and CLI. Kubernetes deployment is consistent across cloud service providers and on-premises solutions.

Solutions provide the capability to deploy a range of well-known charts, including Istio networking, Prometheus and a mix of storage solutions that include Minio, OpenEBS and Redis. We’ll cover the specifics of building applications in another post.

Gaps

NKS is a new service from NetApp, and as such, there is room to build in additional features. Step 4 of the hybrid cloud model, for example, discusses optimisation. One area where NKS could provide further detail is an estimate of the cost of deploying an NKS cluster on each of the available platforms. If data locality is not a problem, then the end-user can choose the cheapest option.
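The arithmetic behind such an estimate is simple, as the hedged Python sketch below shows. The platform names and hourly rates in the usage note are placeholders rather than real prices, rates are expressed in whole cents per node-hour to avoid floating-point rounding, and 730 hours is used as an average month (8,760 hours divided by 12). A real estimate would also need to include storage, load balancer and network charges.

```python
def monthly_cost_cents(rate_cents_per_hour, nodes, hours=730):
    """Compute-only cost of running 'nodes' instances for one average month."""
    return rate_cents_per_hour * nodes * hours


def cheapest_platform(rates_cents_per_hour, nodes):
    """Return (platform, costs) where platform has the lowest monthly cost.

    rates_cents_per_hour maps a platform name to its per-node hourly rate.
    """
    costs = {
        platform: monthly_cost_cents(rate, nodes)
        for platform, rate in rates_cents_per_hour.items()
    }
    return min(costs, key=costs.get), costs
```

With placeholder rates of 10 and 12 cents per node-hour, `cheapest_platform({"platform-a": 10, "platform-b": 12}, nodes=3)` picks `platform-a` at 21,900 cents a month against 26,280 for `platform-b`.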

NetApp also needs to address the requirements of storage assigned to each NKS cluster. Today, AWS-built clusters offer no storage options and simply use local EBS storage. Azure deployments appear to provide access to Azure NetApp Files, but this isn’t yet documented.

Another issue I’ve seen with the solution so far is the management of failure scenarios. Killing a worker node simply reports back a failure to the GUI. I expect that, in time, NetApp will add more automation to resolve common problems.
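To illustrate the kind of automation I have in mind, here is a small Python sketch of a reconcile step that compares the desired number of workers with what is actually healthy. It describes neither current nor planned NKS behaviour; the function name, the node states and the action strings are all hypothetical.

```python
def reconcile_workers(desired, node_states):
    """Return the actions needed so that 'desired' healthy workers exist.

    node_states maps a node name to "ready" or any other (failed) state.
    """
    failed = sorted(name for name, state in node_states.items() if state != "ready")
    healthy = len(node_states) - len(failed)
    # Failed nodes are removed, then enough replacements are requested.
    actions = ["remove " + name for name in failed]
    missing = max(desired - healthy, 0)
    actions += ["provision replacement worker"] * missing
    return actions
```

For the two-worker cluster in the earlier example, a killed `worker-2` would produce a removal followed by one replacement request, rather than only a failure message.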

Managed Kubernetes

Another area of consideration is the integration with managed Kubernetes environments like Fargate and EKS. There’s a question to ask here as to whether having one managed service operating another managed service is a good idea. However, I would say that having a consistent set of interfaces is more critical than attempting to remove multiple layers.

Why NetApp?

I’m sure many readers are thinking, “why is NetApp getting into container orchestration?” It’s a good question and one for which there is no obvious answer. We do know that NetApp, as a company, has decided to embrace the public cloud rather than fight against it. You can see evidence of this with Azure NetApp Files and the other implementations of NetApp services with cloud service providers.

I think that NKS is an enabler to the Data Fabric concept. In the initial instantiation of the NetApp Data Fabric, third-party vendors can offer services into the Fabric. This capability could include data analytics, archiving, virus scanning or any other data-intensive process. NKS provides the means to directly run and manage these applications, wherever the data sits. In this sense, having an application orchestration solution makes complete sense.

The Architect’s View

NetApp is taking a bet that many enterprises prefer to invest in software and technologies, rather than train up individuals on software tools. This approach will be familiar to many IT organisations that prefer to develop high-level skills such as application development. Kubernetes, like OpenStack before it, is a rapidly changing platform that can quickly soak up any available resource within the enterprise. Why build internal skills around VM creation and automating Kubernetes installations when you can simply outsource it?

Putting in place a managed container framework is useful but serves no value until businesses can deploy applications to process their data. We’ll look at Fabric Orchestrator in another post and see how Keystone allows IT organisations to move to a more service-orientated model for on-premises infrastructure.

To learn more about NKS, head over to https://cloud.netapp.com/kubernetes-service or check out this video from Tech Field Day that covers NetApp Cloud Services.

Copyright (c) 2007-2020 Brookend Limited. No reproduction without permission in part or whole. Post #ffd3. This content was sponsored by NetApp and has been produced without any editorial restrictions.