There was a time when all we had in computing was system memory and external storage. Thankfully I’m not old enough to remember when peripherals meant just tape, but in general, life was pretty simple then. In the early 1990s, I did use a little solid-state disk, but that was super-expensive and reserved only for improving overall system performance.

Last year we saw Samsung release Z-NAND and Z-SSDs, and Western Digital release a DRAM caching solution. The hierarchy of products that span (and blur) memory and storage is expanding. How are we going to use them?


Volatility and Performance

We’ve become used to the idea that super-fast memory will be volatile. External storage is persistent, but the latency chasm between system memory and external storage is huge. Typically, we expect a trade-off between the two: either you get super-low latency with volatility, or persistence with a compromise on response times.

The introduction of 3D XPoint technology (branded as Intel Optane and Micron QuantX) blurs these boundaries, offering byte-addressable persistent storage at low latency. Admittedly the latency isn’t as low as DRAM, but it is sufficiently better than solid-state disks to make a difference.

Z-NAND from Samsung narrows that gap again. Western Digital uses planar NAND in the Ultrastar DC ME200 to give “near DRAM” performance with their caching solution. We’ve seen other products look to bridge the DRAM/storage gap too. Diablo Technologies had two products: Memory1, which expanded the memory footprint with cheap flash, and ULLtraDIMM, which ran flash on the DRAM bus as fast storage.
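To make "byte-addressable persistent storage" a little more concrete, here is a minimal sketch of the programming style it enables: map a region into the address space, change a handful of bytes in place, and ask for the change to be made durable. This is only an illustration. A temporary file stands in for a file on a persistent memory device, and the names `REGION_SIZE`, `update_in_place`, `read_back` and `demo` are hypothetical, not part of any vendor API.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # hypothetical size of the mapped region, in bytes


def update_in_place(path, offset, payload):
    """Overwrite a few bytes of a mapped file without rewriting whole blocks."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            # Store at byte granularity, as with ordinary memory.
            region[offset:offset + len(payload)] = payload
            # Ask the OS to write the modified pages back to the backing file.
            region.flush()


def read_back(path, offset, length):
    """Re-open the file and read the same bytes through a read-only mapping."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE, access=mmap.ACCESS_READ) as region:
            return bytes(region[offset:offset + length])


def demo():
    # Assumption: an ordinary temporary file stands in for persistent memory.
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"\x00" * REGION_SIZE)
        os.close(fd)
        update_in_place(path, 128, b"hello")
        return read_back(path, 128, 5)
    finally:
        os.remove(path)
```

Calling `demo()` writes five bytes at offset 128 and reads them back through a fresh mapping. On real persistent memory the same pattern would avoid the block I/O path altogether, which is where the latency benefit comes from.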

NVDIMM

These products fit into a range of solutions variously called Storage Class Memory or Persistent Memory. The NVDIMM format is particularly interesting as it physically fits into DRAM slots (with all the associated timing and support challenges). Now the operating system needs to know which devices are persistent and which are not: which to clear on boot and which to leave alone.

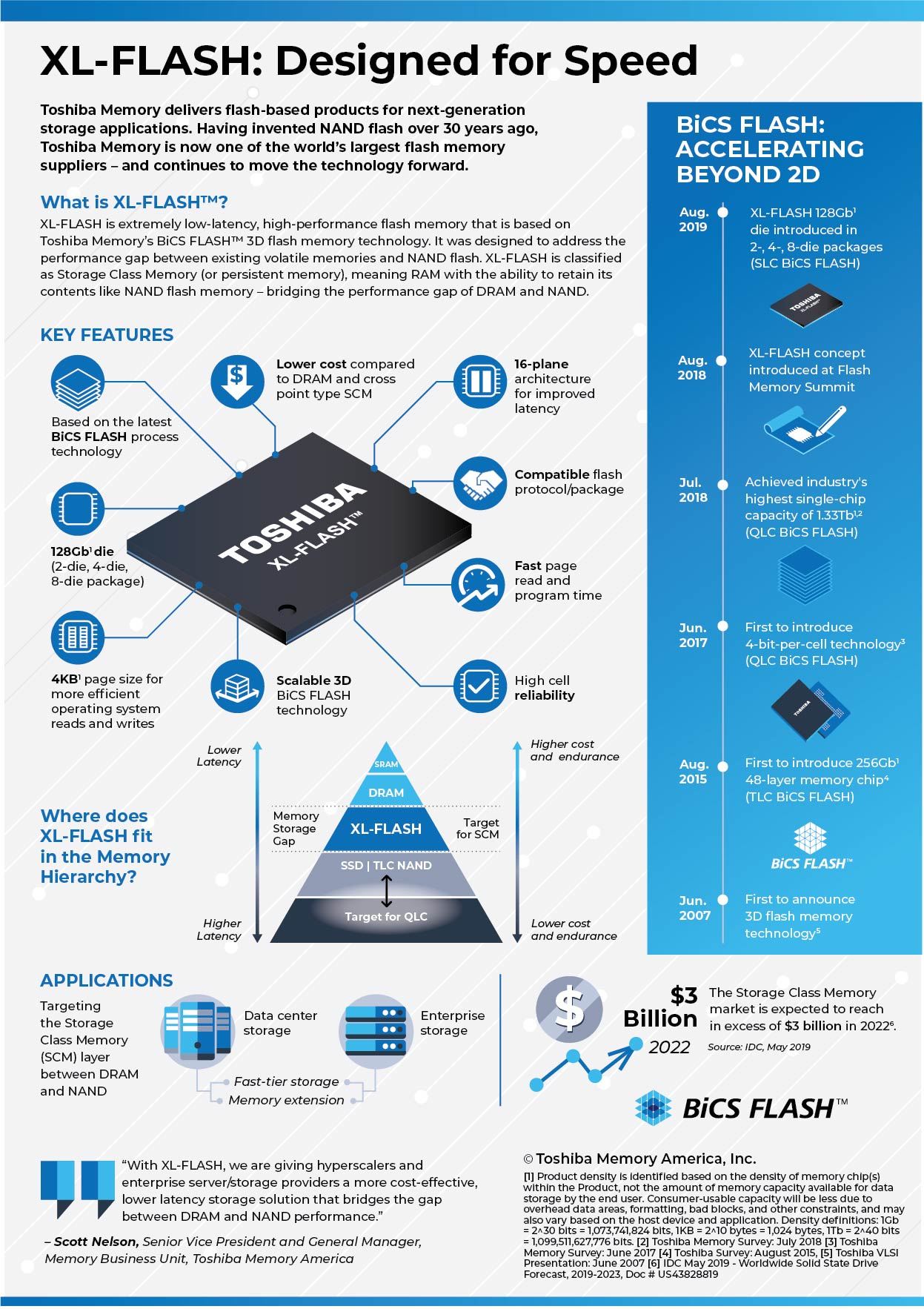

XL-FLASH

The latest entrant to the market is Toshiba with a technology called XL-FLASH. Toshiba claims the product is fast enough to fit into the SCM/PM category, with read latency as low as 5 microseconds. No figures for write performance have been shared.

Although it’s still NAND flash, XL-FLASH has been architected to be faster than traditional flash products. As we increase cell bit count, the performance of flash decreases, especially for write I/O. XL-FLASH (like, we suspect, Z-NAND) uses SLC, the original single-bit-per-cell variant of NAND, which is faster and has greater endurance than TLC and QLC products.
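The link between cell bit count and performance comes down to simple arithmetic: a cell storing n bits has to distinguish 2^n charge levels, so each extra bit doubles the number of states the controller must program and sense within the same voltage window. A minimal sketch follows; the helper names are hypothetical, and MLC (two bits per cell) is included for completeness although it isn't discussed above.

```python
# Bits stored per cell for the common NAND variants.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_levels(bits_per_cell):
    """Number of distinct charge levels a cell must hold to encode its bits."""
    return 2 ** bits_per_cell


def levels_by_cell_type():
    """Map each cell type to the number of levels it has to distinguish."""
    return {name: voltage_levels(bits) for name, bits in CELL_TYPES.items()}


if __name__ == "__main__":
    for name, levels in levels_by_cell_type().items():
        print(f"{name}: {levels} levels")
```

SLC only has to tell two states apart, whereas QLC has to resolve sixteen, which is why programming and reading become slower and less tolerant of wear as the bit count rises.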

New Technology

Other solid-state technologies have been in development for some time or are on the cusp of coming to market. Everspin has a technology called MRAM (magnetoresistive RAM), available in two implementations, that is capable of offering essentially unlimited endurance for persistent storage that is as fast as DRAM (although currently not as scalable).

A New Hierarchy

What does all this new technology give us? Rather than having hard boundaries, we now have a continuum of persistent and non-persistent storage/memory products, each offering a slightly different mix of performance, capacity, endurance and cost.

With a range of products to choose from, there are now many ways to increase application and storage system performance.

HPE uses SCM (Intel Optane) in 3PAR and Primera platforms. This acts as an additional layer of metadata and cache storage, improving overall performance for a modest increase in cost.

NetApp uses SCM with MAX Data software to accelerate the performance of applications at the server layer. This is achieved by creating a local high-performance file system.

Infinidat has demonstrated the use of SCM in Infinibox as a performance accelerator.

IBM has used Everspin MRAM in SSDs to replace supercapacitors for power loss protection. This has increased the capacity of IBM SSDs and allowed the FlashSystem platform to move to the standard 2.5” U.2 form factor.

In just a few examples, we can see this new technology being adopted to improve performance or enhance the capabilities of existing solutions.
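Why does a relatively small amount of fast media help so much in the caching examples above? A back-of-the-envelope model is the weighted average of the two tiers, with the cache hit ratio as the weight. The sketch below uses a hypothetical function name and made-up latency figures purely for illustration; none of the numbers are vendor measurements.

```python
def effective_latency_us(hit_ratio, fast_us, slow_us):
    """Average latency when a fraction of I/O is served from the fast tier."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * fast_us + (1.0 - hit_ratio) * slow_us


if __name__ == "__main__":
    # Hypothetical figures: 10 us for the fast tier, 100 us for the flash behind it.
    for ratio in (0.0, 0.5, 0.75, 1.0):
        print(ratio, effective_latency_us(ratio, fast_us=10.0, slow_us=100.0))
```

With these illustrative figures, a 75% hit ratio brings the average down from 100 to 32.5 microseconds. The model ignores queuing and write behaviour, so treat it as a rough guide rather than a sizing tool.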

Systems

What happens next? From a storage systems design perspective, solution vendors now have a massively increased range of products to choose from. VAST Data, for example, has developed their solution purely based on new technologies, namely Intel Optane and cheap QLC NAND.

Storage system vendors will undoubtedly start using the new products available, either to create new tiers of storage or to improve existing ones. In this respect, I don’t think we’re returning to the days of multi-tiered storage systems with hard drives. Instead, this will be about increasing performance and reducing latency by tactical use of new media technology.

I recently discussed some of these challenges in a podcast with Erik Kaulberg from Infinidat.

SDS

What does this mean for the software-defined folks? First, it definitely means a greater choice of products to use within their platforms. However, it also poses a problem, because each of these devices has a different set of characteristics and I/O profiles. Testing every single product in enough detail to know how best to use it may prove impractical. Some vendors, though, like WekaIO, have actually improved performance over local storage when using new NVMe SSDs.

Alternative Solutions

It’s also worth mentioning here that there are now products blurring the distinction between storage and compute. Computational Storage uses the onboard SoC in SSD controllers to run code locally to data. Other vendors are using FPGAs to accelerate some storage workloads. We’ll discuss more on this in a future post.

The Architect’s View

Putting this all together, the choices for systems and platform (O/S and hypervisor) designers are set to increase. Whether any of the new solutions (like MRAM) will become widely adopted will be determined in part by the size of the cost saving (or performance improvement) that can be achieved. Optane, for example, hasn’t had the widespread adoption that could have been expected, partly because of cost and partly because the vendors aren’t making any money from the product.

We’re in interesting times, as application deployment diversifies to run across a range of platforms. End users, CIOs and businesses will continue to look for the most cost-optimised solutions available, so we can expect increased market diversification to occur.

Copyright (c) Brookend Ltd, 2007-2019. No reproduction without permission, in part or whole. Post #BBB8.