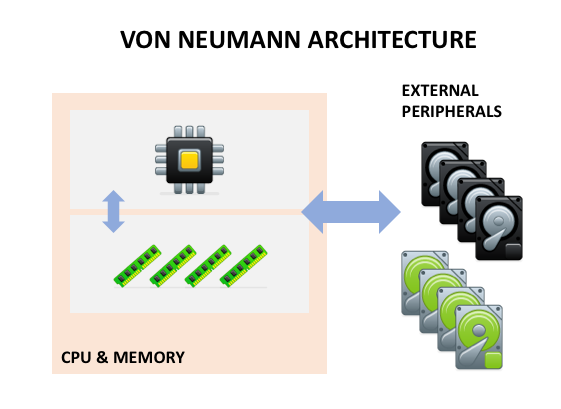

Classic von Neumann architecture dictates that we closely couple processing with volatile system memory, while treating other devices (like storage) as peripherals, literally on the periphery of central computing.

When we ran our information technology as a single monolithic piece of infrastructure, this architecture made sense. Over time, however, as we have standardised on the x86 architecture for 90% of application workloads, the physical constraints of the individual server have resulted in waste and inefficiency. Composability seeks to address these problems by providing a software-driven process to combine, and literally compose, hardware for a more dynamic approach to infrastructure deployment.

Building Blocks

Modern computing is built around the architecture described by John von Neumann in the 1940s. Contemporary system designs have modified it to a degree; however, we still access programs and data from system memory (DRAM), reading blocks of data from and writing them to external storage.

Today we package infrastructure in a range of form factors that are generally based around 1U, 2U or 4U servers, one or more processors, physical slots for DRAM and expansion slots for peripherals. Storage Area Networks (SANs) provided some capability to pool storage resources (more on this later), but in general, processing power and memory are dispersed across multiple server chassis and not shareable.

Pools

Imagine an architecture where we could disaggregate and pool all of the physical resources available. Instead of using rigid building blocks, we could build a dynamic combination of processors, memory and storage to meet the needs of an application being deployed. Now, rather than having to find a server resource that matches application requirements, we simply configure it.


Software Composable Infrastructure (SCI) aims to achieve exactly this. Through software and APIs, IT departments can combine physical resources to build out infrastructure that looks like a physical server, using only the resources needed for that application and in the right proportions.
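To make the idea concrete, here is a minimal sketch of what a composition call might do, assuming the idealised pools described above where CPUs, DIMMs and NVMe drives are individually addressable. The `Pool`, `LogicalServer` and `compose` names are hypothetical and do not correspond to any vendor's API. The composer checks every pool before taking anything, so a request that cannot be satisfied never leaves a half-built server behind.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    """A hypothetical pool of identical, individually addressable devices."""
    kind: str
    free: list[str]

    def take(self, count: int) -> list[str]:
        if count > len(self.free):
            raise RuntimeError(f"only {len(self.free)} free {self.kind}, need {count}")
        taken, self.free = self.free[:count], self.free[count:]
        return taken


@dataclass
class LogicalServer:
    """What the composer hands back: presented to the OS as one server."""
    name: str
    devices: dict[str, list[str]] = field(default_factory=dict)


def compose(name: str, pools: dict[str, Pool], request: dict[str, int]) -> LogicalServer:
    # Validate every pool first so a failed request allocates nothing.
    for kind, count in request.items():
        if count > len(pools[kind].free):
            raise RuntimeError(f"insufficient {kind} for {name}")
    server = LogicalServer(name)
    for kind, count in request.items():
        server.devices[kind] = pools[kind].take(count)
    return server


pools = {
    "cpu": Pool("cpu", [f"cpu{i}" for i in range(8)]),
    "dimm": Pool("dimm", [f"dimm{i}" for i in range(32)]),
    "nvme": Pool("nvme", [f"nvme{i}" for i in range(12)]),
}
# A storage-heavy database server: few CPUs, lots of NVMe.
db = compose("db01", pools, {"cpu": 2, "dimm": 16, "nvme": 6})
print(db.devices["nvme"])  # ['nvme0', 'nvme1', 'nvme2', 'nvme3', 'nvme4', 'nvme5']
```

The proportions (2 CPUs, 16 DIMMs, 6 drives) are whatever the application asks for, rather than whatever a server chassis happened to ship with.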

Why Use SCI?

It’s an obvious question to ask – why bother using SCI in the first place? Surely, we can achieve dynamic application deployment using existing tools like virtualisation and containers? There are two aspects to consider here:

- Static designs, dynamic applications – virtualisation brings the ability to dynamically create and configure virtual machines that can run across multiple servers in a cluster. Inevitably, though, the capacity and performance of CPU, memory and storage in each server never quite match the workloads placed on it, leaving some resources exhausted while others sit idle. This issue isn’t limited to virtual server environments. SCI allows resources to be reconfigured dynamically to mitigate some of these challenges.

- On-Demand Infrastructure – public cloud has demonstrated how infrastructure can be made available on demand, through APIs or a GUI. This is a good thing and sets a standard for how we should expect private cloud to work. However, even with this level of flexibility, cloud service providers (CSPs) offer dozens of fixed configurations that are biased towards processor, storage or memory. Wouldn’t it be easier simply to select the amount of each resource required and have it built dynamically?
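The cost of choosing from fixed configurations can be shown with a little arithmetic. The sketch below assumes a small, entirely hypothetical catalogue of instance shapes and hypothetical relative prices (real CSP catalogues and pricing differ); it picks the cheapest shape that covers a request and reports what is left stranded, then compares that with composing exactly what was asked for.

```python
# Hypothetical catalogue of fixed shapes: name -> (vCPUs, memory GiB).
CATALOGUE = {
    "general.large": (4, 16),
    "general.xlarge": (8, 32),
    "compute.xlarge": (8, 16),
    "memory.large": (4, 32),
}

# Hypothetical relative prices, used only to rank shapes.
PRICE_PER_VCPU = 1.0
PRICE_PER_GIB = 0.25


def cost(vcpus: int, gib: int) -> float:
    return vcpus * PRICE_PER_VCPU + gib * PRICE_PER_GIB


def best_fixed_shape(need_vcpus: int, need_gib: int) -> tuple[str, int, int]:
    """Cheapest catalogue shape covering the request, plus idle vCPUs and GiB."""
    fits = [
        (cost(c, m), name, c, m)
        for name, (c, m) in CATALOGUE.items()
        if c >= need_vcpus and m >= need_gib
    ]
    if not fits:
        raise ValueError("no fixed shape is large enough")
    _, name, c, m = min(fits)
    return name, c - need_vcpus, m - need_gib


name, idle_cpu, idle_gib = best_fixed_shape(6, 20)
print(name, idle_cpu, idle_gib)  # general.xlarge 2 12
print(f"fixed {cost(*CATALOGUE[name])}, composed {cost(6, 20)}")  # fixed 16.0, composed 11.0
```

A request for 6 vCPUs and 20 GiB has to be rounded up to an 8 vCPU, 32 GiB shape, stranding 2 vCPUs and 12 GiB; a composed system would be built to the request itself.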

Recycle, Reuse, Reduce

SCI offers the ability to be much more efficient with existing hardware. The idea of a more flexible and dynamic infrastructure makes sense, but what if resources are needed for a short-term project or to meet peaks in demand? Composability provides a way to pick compute, memory and storage from a pool, use them for a short period and return them when finished, all dynamically and through software.
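The “borrow, use, return” pattern maps naturally onto a scoped lease. This is a minimal sketch assuming a hypothetical `SharedPool` that only tracks free units per resource type; the design point it illustrates is that returning resources should not depend on the job finishing cleanly, so the release sits in a `finally` block.

```python
from collections import Counter
from contextlib import contextmanager


class SharedPool:
    """Hypothetical pool tracking free units of each resource type."""

    def __init__(self, **capacity: int) -> None:
        self.free = Counter(capacity)

    def acquire(self, request: dict[str, int]) -> None:
        short = [k for k, v in request.items() if self.free[k] < v]
        if short:
            raise RuntimeError(f"pool exhausted for {sorted(short)}")
        self.free.subtract(request)

    def release(self, request: dict[str, int]) -> None:
        self.free.update(request)


@contextmanager
def lease(pool: SharedPool, **request: int):
    """Borrow resources for a short-lived job and always hand them back."""
    pool.acquire(request)
    try:
        yield request
    finally:
        pool.release(request)


pool = SharedPool(cpu_cores=64, memory_gb=512, gpus=4)
with lease(pool, cpu_cores=16, memory_gb=128, gpus=2):
    print(pool.free["gpus"])  # 2 while the peak-demand job runs
print(pool.free["gpus"])      # 4 once the job has finished
```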

As application requirements change, it may make sense to retain physical servers and repurpose them for other uses, such as test/development environments. SCI makes that process easier and, with efficient software, can put resources directly in the hands of developers without server admins needing to get involved.

So, SCI offers:

- Flexibility – more flexible configuration and combinatorial options than could be achieved with other technologies such as blades, converged infrastructure or hyper-converged infrastructure.

- Dynamism – the ability to configure resources on-demand and through APIs without needing manual intervention.

- Efficiency – the capability to optimise costs and reuse resources more efficiently.

These benefits are great, but what’s the reality of what can be achieved today?

It’s a Journey

If we look at modern server architectures, we see that compute and memory are very closely coupled. However, we’ve had physical resource partitioning in computing since the 1980s. Amdahl introduced MDF (Multiple Domain Facility) in 1982, while IBM introduced similar LPAR (logical partition) technology as PR/SM in the late 1980s. Sun Microsystems’ Sun Fire architecture in the 2000s offered Dynamic Reconfiguration to assign processors and memory to individual partitions.

Unfortunately, this isn’t the route the x86 architecture has taken. Intel developed x86 for embedded systems, then positioned it as a competitor in personal computing. As Microsoft developed Windows NT and Linux became popular, x86 became the de facto standard for general-purpose computing.

This represents a problem for composability. Today, processor and memory resources are still aligned to a physical server, although storage has become significantly abstracted. We’ll discuss processor/memory issues in a moment, but for now, let’s look at storage.

SAN 2.0

Storage Area Networks, introduced in the early 2000s, provided abstraction and centralisation of storage resources. Fibre Channel (FC) and iSCSI allow hosts to be mapped directly to block storage using either dedicated FC networks or Ethernet. Both have strengths and weaknesses: Fibre Channel requires dedicated host bus adaptors (HBAs), whereas iSCSI works across standard Ethernet; however, FC is a lossless transport with credit-based flow control and is much more reliable than standard Ethernet.

As the de facto protocol for SANs, Fibre Channel has had a good run. However, the technology is seen as complex and cumbersome, with a need for very specific skills. Although this isn’t strictly true, it’s certainly the case that FC SAN configuration is very static and requires dedicated hardware.

The introduction of NVMe and specifically NVMe over Fabrics (NVMe-oF) represents an opportunity to take the benefits of a dedicated storage network and integrate them with standard compute and networking. NVMe-oF can run over RDMA fabrics (such as RoCE on Ethernet, or InfiniBand), Fibre Channel or TCP/IP. In a composable model using RoCE or NVMe/TCP, a server just needs an Ethernet route to storage in order to consume NVMe logical or physical devices.
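In NVMe-oF, hosts and target subsystems are identified by NVMe Qualified Names (NQNs), and a target decides which host NQNs may connect to which subsystem. The sketch below is a hypothetical in-memory model of that idea, not a real target or library API: composing storage onto a server amounts to granting its host NQN access, and decomposing it amounts to revoking that access. The NQN strings are made-up examples in the `nqn.yyyy-mm.reverse-domain:name` style. On a Linux host, the connection itself would typically be made with nvme-cli's `nvme connect`.

```python
from dataclasses import dataclass, field
from enum import Enum


class Transport(Enum):
    """NVMe-oF transport families."""
    RDMA = "rdma"  # e.g. RoCE over Ethernet, or InfiniBand
    FC = "fc"      # FC-NVMe over Fibre Channel
    TCP = "tcp"    # NVMe/TCP over ordinary IP networks


@dataclass
class Subsystem:
    """Hypothetical model of one NVMe-oF target subsystem."""
    nqn: str
    transport: Transport
    namespaces: list[int] = field(default_factory=list)
    allowed_hosts: set[str] = field(default_factory=set)

    def grant(self, host_nqn: str) -> None:
        # Composing storage onto a server: allow its host NQN to connect.
        self.allowed_hosts.add(host_nqn)

    def revoke(self, host_nqn: str) -> None:
        # Returning storage to the pool: remove the host's access.
        self.allowed_hosts.discard(host_nqn)

    def visible_namespaces(self, host_nqn: str) -> list[int]:
        return list(self.namespaces) if host_nqn in self.allowed_hosts else []


vol = Subsystem("nqn.2019-01.com.example:pool-a", Transport.TCP, namespaces=[1, 2])
host = "nqn.2019-01.com.example:host-db01"
vol.grant(host)
print(vol.visible_namespaces(host))  # [1, 2]
vol.revoke(host)
print(vol.visible_namespaces(host))  # []
```

Because nothing in the model depends on which `Transport` is used, the same composition logic applies whether the fabric is Ethernet or Fibre Channel.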

Composable 1.0

In its first incarnation, composable infrastructure means combining a range of physical server configurations with dynamically allocated storage. Vendors have focused on producing physical enclosures that combine multiple servers and storage connected to a common backplane, either Ethernet or PCI Express. For example, a storage-intensive workload could be deployed on a server using one of the new AMD EPYC processors, which offer more PCI Express bandwidth.

While this is useful, it doesn’t address the ideal scenario of being able to combine multiple processors and memory, as we could more than 35 years ago.

Composable 2.0

The increasing performance of PCI Express offers the ability to move a step closer to true composability. Today most servers use PCI Express 3.0, which offers throughput of just under 1GB/s per lane in each direction, scaling linearly with link width (x4, x8, x16). PCIe 4.0 products and support are starting to emerge and will double that throughput; PCIe 5.0 will double it again.
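The “just under 1GB/s” figure falls out of the signalling rate and line encoding: PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, and PCIe 4.0 and 5.0 keep that encoding while doubling the rate to 16 and 32 GT/s. The short calculation below reproduces the numbers per direction; it ignores packet and protocol overhead, so real-world throughput will be somewhat lower.

```python
# Per-generation signalling rate (GT/s per lane) and encoding efficiency.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def lane_gbytes_per_s(gen: int) -> float:
    """Usable GB/s per lane, per direction, before protocol overhead."""
    gts, efficiency = PCIE_GENERATIONS[gen]
    return gts * efficiency / 8  # one bit per transfer per lane; 8 bits per byte


for gen in PCIE_GENERATIONS:
    per_lane = lane_gbytes_per_s(gen)
    print(f"PCIe {gen}.0: {per_lane:.3f} GB/s per lane, {per_lane * 16:.1f} GB/s x16")
# PCIe 3.0: 0.985 GB/s per lane, 15.8 GB/s x16
# PCIe 4.0: 1.969 GB/s per lane, 31.5 GB/s x16
# PCIe 5.0: 3.938 GB/s per lane, 63.0 GB/s x16
```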

With PCI Express as a backplane, composable solutions can now incorporate PCIe devices like GPUs and FPGAs. Because these are relatively expensive resources, GPU and FPGA cards can be pooled and attached to servers and storage as needed, boosting performance for short periods, for testing or as part of a new design.

Composable 3.0?

Where do we go after this? The performance and low latency of PCI Express offer the ability to connect other components into the processor complex. Infrastructure vendors are working on new interconnect technologies like Gen-Z, CCIX, OpenCAPI and CXL that will bring low-latency persistent memory into the architecture. This could also include faster direct memory access between servers. At this point, we could achieve loose coupling between processors in a complex; not entirely traditional NUMA (non-uniform memory access), but close. Of course, none of this is going to succeed without the involvement of Intel, which owns the chipset architecture.

The Architect’s View®

The benefits of true software-composable infrastructure are tantalisingly close. Today we have limited capabilities, but we’re seeing new features being developed to extend the composability paradigm.

In future posts, we’ll look in detail at some of the features needed to implement SCI and the technologies and vendors that are adding more composability into existing infrastructure.

Post #6208. Copyright (c) 2019 Brookend Ltd. No reproduction in whole or part without permission.