VMware has announced Project Monterey, a hardware offload technology similar to AWS Nitro. The offering uses ESXi on ARM, running on SmartNICs from Mellanox and other partners. Are we entering a new era of offloaded computing, or does this solution simply create more complexity?

SmartNICs

SmartNIC technology has been available in the market for a few years. Last year on Storage Unpacked, I talked to Rob Davis from Mellanox about the use of SmartNICs to offload core functionality like networking and storage. Mellanox has since been acquired by NVIDIA, which has rebranded the BlueField technology as a DPU, or data processing unit.

SmartNIC technology currently offers two main designs. In the first, BlueField and NICs from companies such as Pensando sit in the data path and offload networking and storage functionality. For example, at the storage layer, a SmartNIC might present as a series of virtual NVMe drives on the PCIe bus, while the storage is actually located on a separate cluster of servers. We’ve seen this model with Nebulon, E8 Storage and Nitro from AWS. Networking functionality could present as virtual NICs or firewalls. In both cases, the data moves across a high-speed network, typically 50GbE/100GbE or InfiniBand.

The second model offloads functionality locally within a server. Examples of this technology include the Pliops storage processor (offloading encryption), Xilinx Alveo and NGD Systems computational storage.

Use Cases

The obvious question is, why bother with this kind of offload technology? I discuss some of the benefits in this blog post from earlier in the year, which compares modern x86 architecture to the IBM mainframe. Today, x86 processors offer dozens of cores, but we have to question whether ARM could make successful inroads into the data centre. You can read some additional background on this scenario in this blog post from the beginning of 2020.

Composable

There’s definitely a case to be made for offloading networking and storage functionality. If we combine this capability with composable infrastructure, we can see an architecture where discrete components are combined on-demand, while the networking and storage abstractions we gained in virtualisation are pushed to hardware.

- What is Software Composable Infrastructure?

- Mainframe – the Original Composable Infrastructure

- Liqid’s PCIe Fabric is the Key to Composable Infrastructure

Just think for a moment what this could mean. Now we can dynamically move GPUs and storage in and out of hardware clusters. We can dynamically map storage and networking with consistent MAC addresses and LUN IDs across the infrastructure, so as hardware fails, is shut down or needs to increase in capacity, we can achieve all of this outside the server itself and without lots of host-based reconfiguration.
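To make the idea of consistent identities concrete, here is a minimal sketch in Python. It assumes a hypothetical `Profile` (the MAC addresses and LUN IDs a workload sees) and a hypothetical `Fabric` that tracks which physical server carries each profile. None of these names come from VMware, NVIDIA or any other vendor; they simply illustrate how an identity can move between servers unchanged.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Profile:
    """Logical identity seen by the workload (hypothetical, not a vendor schema)."""
    name: str
    mac_addresses: Tuple[str, ...]
    lun_ids: Tuple[int, ...]


@dataclass
class Fabric:
    """Tracks which physical server currently carries which profile."""
    servers: List[str]
    bindings: Dict[str, Profile] = field(default_factory=dict)

    def bind(self, server: str, profile: Profile) -> None:
        if server not in self.servers:
            raise ValueError(f"unknown server: {server}")
        if server in self.bindings:
            raise ValueError(f"{server} already carries a profile")
        self.bindings[server] = profile

    def fail_over(self, failed: str) -> str:
        """Move the profile from a failed server to the first free server."""
        profile = self.bindings.pop(failed)  # KeyError if nothing was bound
        for candidate in self.servers:
            if candidate != failed and candidate not in self.bindings:
                self.bindings[candidate] = profile
                return candidate
        # No spare capacity: put the binding back and report the problem.
        self.bindings[failed] = profile
        raise RuntimeError("no spare server available")


# Usage: the MAC addresses and LUN IDs follow the profile, not the hardware.
fabric = Fabric(servers=["srv-a", "srv-b", "srv-c"])
db = Profile("db01", ("02:00:00:00:00:01",), (0, 1))
fabric.bind("srv-a", db)
target = fabric.fail_over("srv-a")
```

The point of the sketch is that nothing inside `Profile` changes during the move. In a real deployment the rebinding would be carried out by the SmartNIC and its management plane rather than by the host operating system.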

Centralisation

The idea of entirely composable infrastructure offboard from the deployed operating system is one aspect highlighted by Pensando and Nebulon. The benefits cannot be overstated. Imagine, for example, the work that goes into mapping and masking storage to a host. Today we use HBAs, LUN masking and Fibre Channel zones (or the equivalent for NVMe-oF and iSCSI). Significant configuration work is still needed at a host level. This can all be eliminated when the SmartNIC onboard the server presents disks as local NVMe drives – and can be managed remotely to achieve this process.
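One way to picture remote management of a SmartNIC is as a simple reconciliation between the drives currently presented to the host and the drives a central controller wants presented. The sketch below is an assumption about how such a control loop might look, not a description of any vendor's implementation; the function name `plan_changes` and the volume names are hypothetical.

```python
from typing import List, Set, Tuple


def plan_changes(presented: Set[str], desired: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (volumes to attach, volumes to detach) for one SmartNIC.

    `presented` is what the card exposes to the host as local NVMe drives now;
    `desired` is what the central controller wants exposed.
    """
    attach = sorted(desired - presented)
    detach = sorted(presented - desired)
    return attach, detach


# Usage: the host is never touched; only the card's presentation changes.
attach, detach = plan_changes(
    presented={"vol-logs", "vol-scratch"},
    desired={"vol-logs", "vol-data"},
)
```

Here the result would be to attach `vol-data` and detach `vol-scratch`, with no zoning, masking or host-side rescanning in the model. How a real card applies such a plan is outside the scope of this sketch.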

Project Monterey

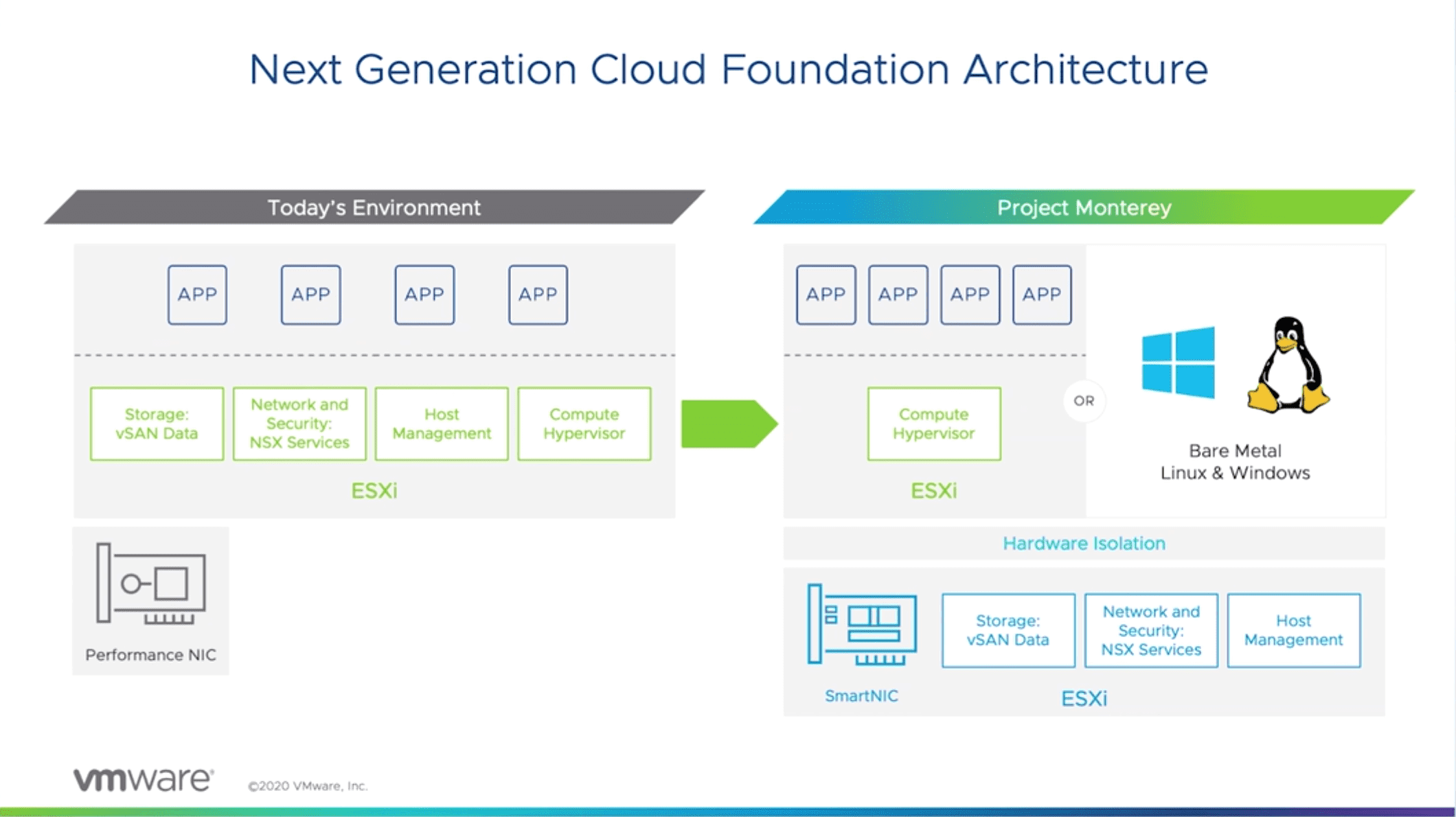

Let’s move on and look at what VMware is planning with Project Monterey. The company is working with partners such as NVIDIA and Pensando to develop offload technology for storage and networking. Instead of building these features into the hypervisor, BlueField SmartNICs take over the work and deliver local storage and networking.

The public details released by VMware have been light, but in a VMworld presentation, Kit Colbert demonstrated storage and networking offload on ESXi 7. The video highlighted a few interesting points.

- VMware intends to run ESXi on ARM onboard the SmartNIC and the company is making a virtue of this architectural design. In the presentation, a diskless ESXi node consumes storage over the network from a SmartNIC running vSAN and exposing NVMe LUNs. The demonstration shows similar functionality with network offload to the SmartNIC using NSX.

- VMware talks about bare metal deployments in a way that is clearly meant as a teaser to the viewer. Why would a virtualisation company want to provide embedded storage and networking capabilities to physical servers running native operating systems?

At first glance, the use of ESXi on ARM incorporating vSAN looks overly complex. There’s no indication of how redundancy and resiliency are built into this model. For example, will storage functionality require a minimum of three servers and offload cards? How will resiliency be managed with SmartNIC failure (will cards be dual-homed)? How will licensing work? Will costs significantly increase to cater for the additional ESXi and vSAN licences? Similar questions arise on the networking side.

Bigger Picture

But perhaps there’s a different end game here. Custom hardware is an excellent lock-in solution that becomes hard to unpick. While the BlueField SmartNICs are off-the-shelf components, their integration into a solution like the one being demonstrated would result in a long-term commitment to the VMware architecture.

If the infrastructure/application strategy for customers moves towards a high degree of containerisation, then more bare-metal deployments may become the norm. Even without Tanzu, VMware could still be relevant in this market, presenting customised networking and storage to bare-metal hosts. It’s a strong strategy that competes with shared storage and solutions from companies developing NVMe-oF or their own embedded offerings.

Size Matters

Project Monterey isn’t for everyone and, in fact, is probably only for VMware’s largest customers that may be looking to build out more efficient compute farms based on open-source virtualisation or containers. While I don’t think the technology is aimed at hyperscalers, I do believe that VMware has in mind the largest customers with complex management issues.

Project Monterey potentially mitigates the challenges of managing vSAN and NSX at the cluster level, moving functionality to the data centre layer where scale can be managed more effectively. vSAN, as an example, moves from an SMB/SME offering to one that works across the entire data centre without the lock-in of a single cluster.

The Architect’s View

At this point in time, we only get to see what VMware wants us to know about the development of Project Monterey. The teaser about bare-metal could herald ESXi on ARM for more mainstream applications. We also shouldn’t forget where NVIDIA sits in this discussion. The company is hoping to complete the acquisition of ARM and has already added Ampere GPUs to the BlueField architecture. We could eventually see an end-to-end computing solution purely based on NVIDIA IP with no Intel involved at all.

Project Monterey could be just the start of an entire transition away from x86, where AI GPUs are more closely integrated with ARM. Are we on the cusp of a real inflection point in the data centre where the dominance of x86 could be coming to an end?

Copyright (c) 2007-2020 – Post #0994 – Brookend Ltd, first published on https://www.architecting.it/blog, do not reproduce without permission.