The rate of growth in flash media is truly incredible. In ten years, we’ve gone from highly expensive SLC devices to a range of NAND flash media for all requirements. Flash manufacturers continue to push the boundaries, with 30TB and 100TB drives already on the market. With no end to this growth in sight, how can array vendors make sure that their solutions can still perform?

- Dude, Here’s Your 100TB Flash Drive!

- Samsung 30TB SSD – The New Normal

- Micron ushers in the era of QLC SSDs

How does DRAM and metadata management get handled on devices that have millions of sub-objects to track, while implementing data services that customers expect?

The Scale of the Problem

When flash was first introduced into the Symmetrix DMX platform in 2008, EMC placed limits on the number of drives per system and the physical location of those drives on the back-end storage bus. Flash was so much faster than disk and had the capability to overwhelm the system, so EMC had to be careful about how they were used.

As flash has been adopted in either existing or newly designed shared storage arrays, vendors have been under pressure to introduce ever greater capacity drives. Increased capacity reduces the $/GB calculation, a marker of pricing in the industry. However, simply plugging in bigger drives into existing all-flash solutions isn’t that simple, because flash, like disk before it is divided up and mapped at a much more granular level.

Metadata

Depending on the implementation, vendors sub-divide storage into blocks, anywhere from 512K upwards. Typically, values are between 4KB – 16KB. When features like de-duplication, compression and thin provisioning are implemented, each block represents a piece of unique data.

In simplest terms, the smaller the block size, the better the savings from data services, because more duplicate data can be eliminated with smaller blocks. In reality, this isn’t practical and there’s a trade-off between block size, the effort to track and identify blocks and the processing power to calculate hash keys from data and do tasks like compression.

Let’s just think though, for a moment, before we proceed, how much metadata is needed to track a 1TB flash drive with 4K blocks. A single 1TB drive can be divided into 250 million 4K blocks. A typical 8TB drive is around 2 billion pieces of metadata to track. Remember this is just media metadata and we have to track logical volume metadata too. When storage arrays start to contain multiple 8-30TB drives, we start to see the scale of the problem.

DRAM

With the speed of NAND access today, metadata needs to be kept in memory, otherwise we risk slowing down an expensive resource. This is more important than ever, as we move to NVMe SSDs and Storage Class Memory. Typically, in-memory means in the controller(s), each of which needs to scale with the same amount of DRAM. Most implementations will also use NVRAM as a journal of metadata updates, with periodic flushing to SSD or other persistent media.

So, can we see a correlation between the amount of DRAM per controller and the amount of storage supported by shared storage arrays?

Look at the following table, which shows data for SolidFire nodes since the company was founded (I think I have them all). The ratio of DRAM rises consistently with each hardware iteration. To use 7.68TB drives would theoretically need 1.5TB of DRAM per node and 15.3TB drives around 3TB of DRAM.

| SolidFire Model | Drive Capacity (GB) | DRAM (GB) | Ratio |

|---|---|---|---|

| SF3010 | 300 | 72 | 4.2 |

| SF6010 | 600 | 144 | 4.2 |

| SF9010 | 900 | 256 | 3.5 |

| SF2405 | 240 | 64 | 3.75 |

| SF4805 | 480 | 128 | 3.75 |

| SF9605 | 960 | 256 | 3.75 |

| SF19210 | 1920 | 384 | 5 |

| SF38410 | 3840 | 768 | 5 |

To be fair, scaling up the capacity per node isn’t the design goal of SolidFire, which is meant to be scale-out. So we may never see drives of 15+TB in this architecture unless the number of drives per node is decreased. This is exactly what we see in NetApp HCI designs, with half-width servers and 6 drives per node using SolidFire for storage.

XtremIO

Let’s look at another example. How about XtremIO?

The original XtremIO X-Bricks has 256GB of DRAM per controller, with support for up to 25 400GB drives. The details of the controllers haven’t been on specification sheets for some time, however, we know from a few presentations and other links that the latest X2-S and X2-R models have 384GB and 1TB of DRAM per controller respectively. These support 400GB and 1.92TB drives.

As part of the 2.4 -> 3.0 XIOS upgrade (the infamous destructive upgrade), the internal block size was changed from 4KB to 8KB. The first version of document h11752 (XtremIO architecture) mentions 4KB blocks, whereas subsequent versions of the document don’t mention block size at all. So, bearing in mind the block size change from 1.0 to today, the following table shows the DRAM/flash ratios we know about. Again, there’s a correlation between DRAM and storage. This is as we’d expect, with a platform where all of the metadata is in memory.

| XtremIO Model | Drive Capacity (GB) | DRAM (GB) | Ratio |

|---|---|---|---|

| X1 1.0 | 400 | 256 | 1.56 |

| X2-S | 400 | 384 | 1.04 |

| X2-R | 1920 | 1024 | 1.875 |

What does this mean for larger capacity drives? 3.84TB SSDs could be supported, although the number of processors per node would have to increase to meet the memory requirements. On these ratios, 7.68TB drives wouldn’t work without some serious server horsepower, somewhat countering the scale-out nature of the platform.

Constraints

In architectures that are based on standard server design (and where metadata is all in-memory), it’s easy to see how capacity scaling is limited by the amount of DRAM available. Increasing DRAM to the maximum allowed by the architecture means committing to more processors than perhaps are needed and to using a larger form-factor server.

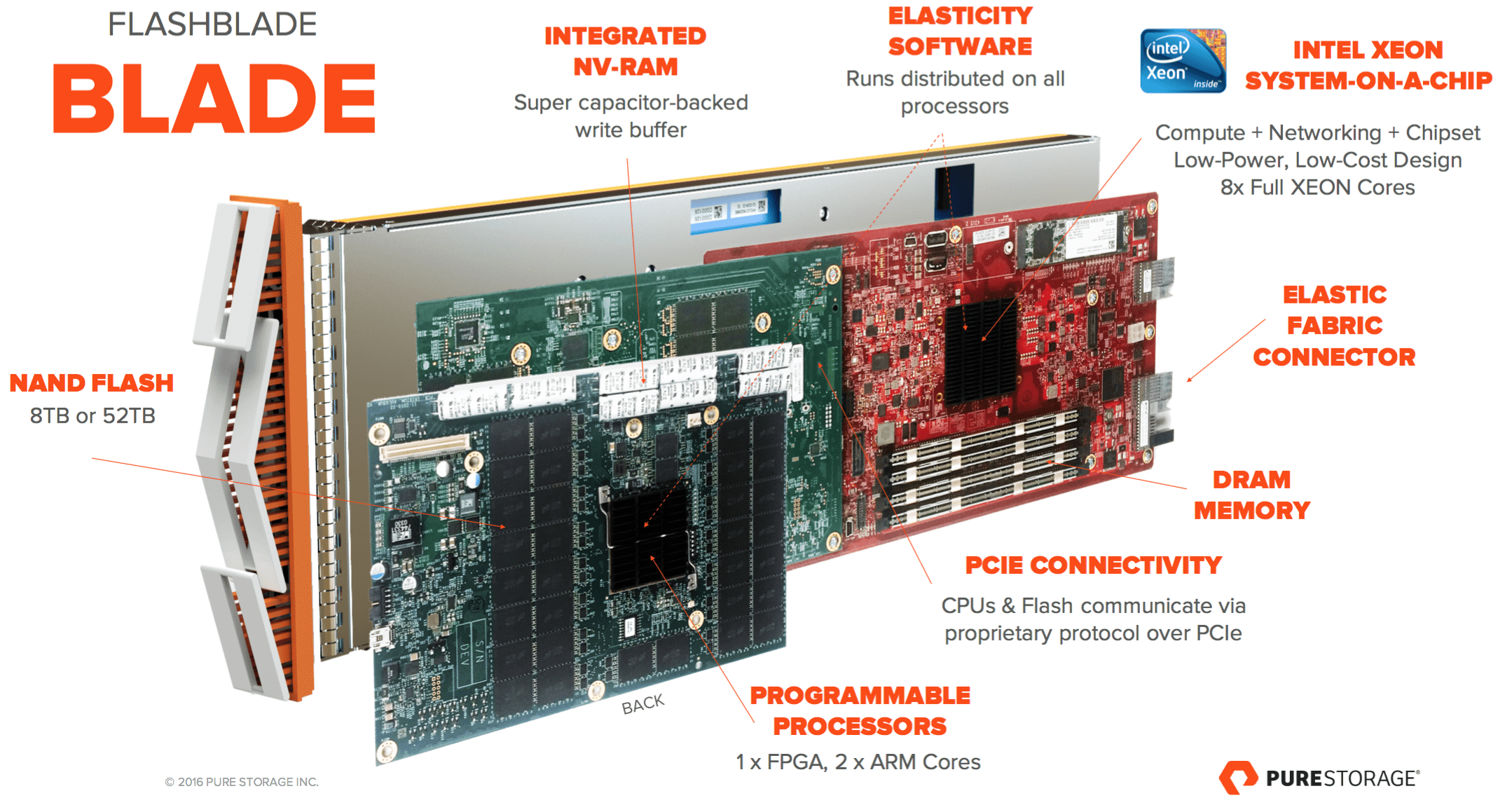

What are the alternatives? One scenario is not to store all the metadata in DRAM at the same time. This approach is taken by, for example, Pure Storage with FlashArray. Metadata is stored on SSD and mostly kept in memory, with a separation made on metadata for logical data and metadata for physical media. Actually, there’s a lot more to the architecture than just this, but you get the idea. Similarly, FlashBlade scales metadata and DRAM with each blade added.

Another different architectural approach is that taken by Vexata. The VX-100 platform uses a hardware Enterprise Storage Module with up to 4 SSDs and a MIPS processor. Metadata is distributed across the ESMs and stored in DRAM associated with each ESM processor and so scalable independently of the front-end management controllers. You can find more on the Vexata architecture in a recent white paper we wrote that covers the architecture in more detail. Alternatively, we have a 2-page briefing document that highlights key architectural features.

The Architect’s View

As we’ve said before, architecture matters. Riding the DRAM curve puts limitations on architecture and with QLC and large-capacity drives already here, building the next wave of all-flash systems needs to cater for the scalability we’re seeing from flash. It would be easy to think that we’ll never see 30TB+ drives in arrays, but we said that about terabyte-sized HDDs too. It’s going to be fun watching it play out in the next few years, as vendors yet again have to go through the (re)design process.

Comments are always welcome; please read our Comments Policy. If you have any related links of interest, please feel free to add them as a comment for consideration.

Copyright (c) 2007-2020 – Post #AA6E – Brookend Ltd, first published on https://www.architecting.it/blog, do not reproduce without permission. Disclaimer – Pure Storage, Vexata and NetApp have been or are customers of Brookend Ltd.