Over recent years we’ve covered the incredible increase in capacity experienced in hard drive and NAND flash technology. Today’s devices are quoted in terabyte units, with 100TB drives likely to be common in the not too distant future. Unfortunately, our ability to get data on and off these devices has remained relatively limited. New techniques are being developed that zone or segment drives, allowing them to be accessed much more effectively than before.

Update: Western Digital announced the Ultrastar DC ZN540 in November 2020. WDC is touting performance improvements and better QoS with the drive. Samsung announced their first ZNS drive in June 2021. The PM1731a will offer between three and four times the lifespan of comparable non-ZNS drives.

Single-stream Performance

Back in 2013, I produced some data for a presentation that highlighted the I/O density reduction experienced in the hard drive market. The aim was to show how SSDs were destined to replace HDDs over time. The data was derived from 15K Seagate Savvio drives, a category that has all but been replaced by solid-state media. At the time, these 300GB HDDs achieved approximately 176MB/s sustained throughput. Today the Seagate Barracuda Pro (7.2K RPM, 14TB) has increased to a sustained throughput of 280MB/s. This capability seems like a significant improvement until you realise that, measured as throughput per unit of capacity, this is only around 1/30th the I/O density of the 2013 Savvio drive.
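The I/O density comparison can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures quoted above; the function names are illustrative, and it assumes decimal units (1TB = 1,000GB, 1GB = 1,000MB).

```python
def io_density(throughput_mb_s: float, capacity_gb: float) -> float:
    """Sustained throughput available per GB of capacity (MB/s per GB)."""
    return throughput_mb_s / capacity_gb


def full_drive_hours(throughput_mb_s: float, capacity_gb: float) -> float:
    """Hours needed to read or write the whole drive sequentially."""
    seconds = capacity_gb * 1000 / throughput_mb_s
    return seconds / 3600


savvio = io_density(176, 300)        # 2013 Savvio: 300GB at 176MB/s
barracuda = io_density(280, 14_000)  # Barracuda Pro: 14TB at 280MB/s
ratio = savvio / barracuda

print(f"Savvio:    {savvio:.3f} MB/s per GB")
print(f"Barracuda: {barracuda:.3f} MB/s per GB")
print(f"Density ratio: {ratio:.1f}x")
print(f"Time to fill the Barracuda: {full_drive_hours(280, 14_000):.1f} hours")
```

The ratio comes out at a little over 29, which is where the "1/30th" figure comes from. The same numbers show that filling the 14TB drive takes most of a day, even with a perfectly sequential workload.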

- That 100TB Drive Is Closer Than You Think

- Dude, Where’s my 100TB Hard Drive?

- Dude, Here’s Your 100TB Flash Drive!

Remember that these performance numbers are for throughput and so represent, at best, what can be achieved with a purely sequential workload. Random I/O performance has hardly improved at all in the ten years between the release of the Savvio and Barracuda drive models and, in this instance, would be worse for the Barracuda, as the speed of response is partly based on rotational latency.

SMR

In the HDD market, vendors continue to look for new ways to improve areal density. One solution is SMR, or shingled magnetic recording. This process overlays tracks of data (like the shingles or tiles on a house roof) to improve density. The side-effect is that individual tracks cannot be re-written in place but have to be written as an entire area or zone. In many respects, this is analogous to the challenges of NAND flash media, where data can only be written in pages and erased in much larger blocks (more on this later).

Zones

So how are vendors looking to solve the challenges of using all the capacity in a drive? Two solutions are in play today, one focused on spinning media, the other on flash drives. Zoned drives, typically those using SMR recording technology, provide the capability to segment the physical capacity of a drive into logical zones and zone domains. A single zone is a collection of LBAs (logical block addresses, or simply blocks); zones are in turn grouped into zone domains.

Zones are much larger than a single LBA, for example, 256MB on the Western Digital Ultrastar DC HC620/HC650 models. A single drive could have multiple zones actively writing at any one time, representing numerous streams of data into a single device.

Going back to SMR for a moment, we can see that the technology presents issues with the process of writing data onto overlaid tracks. The proximity of each track introduces the risk of corruption of nearby data, so SMR tracks are only written in order. You can’t go back and re-write a track that’s in the middle of a series of shingled tracks. As a result, SMR drives write sequentially, only allowing data to be re-written at the zone level. This means that changing data in the middle of a zone requires a read-modify-write process – read the zone into cache, modify the LBA, write the entire zone back again.
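The cost of that read-modify-write cycle can be sketched in a few lines. This is an illustrative model only, not drive firmware: the 4KiB block size is an assumption, the function name is hypothetical, and the demo uses a scaled-down zone so it runs quickly.

```python
BLOCK_SIZE = 4096                   # assumed logical block size (4KiB)
FULL_ZONE_SIZE = 256 * 1024 * 1024  # 256MB zone, as quoted in the text


def rewrite_block(zone: bytearray, block_index: int, new_data: bytes) -> int:
    """Change one block in a zone via read-modify-write.

    Returns the number of bytes that had to be written back to the media.
    """
    if len(new_data) != BLOCK_SIZE:
        raise ValueError("new_data must be exactly one block")
    start = block_index * BLOCK_SIZE
    if block_index < 0 or start + BLOCK_SIZE > len(zone):
        raise IndexError("block lies outside the zone")

    cached = bytearray(zone)                      # 1. read the zone into cache
    cached[start:start + BLOCK_SIZE] = new_data   # 2. modify the one block
    zone[:] = cached                              # 3. write the whole zone back
    return len(cached)


# Scaled-down demo: a 16-block zone instead of a full 256MB one.
demo_zone = bytearray(16 * BLOCK_SIZE)
written = rewrite_block(demo_zone, 5, b"\xff" * BLOCK_SIZE)
print(f"Blocks written to change one block: {written // BLOCK_SIZE}")

# The same ratio for a full-size zone, computed rather than simulated.
blocks_per_full_zone = FULL_ZONE_SIZE // BLOCK_SIZE
print(f"Blocks per 256MB zone: {blocks_per_full_zone}")
```

Under these assumptions, a single 4KiB update in the middle of a full zone turns into 65,536 blocks of writes, which is why in-place updates are so expensive on SMR media.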

Multiple Streams

As we discussed earlier, random I/O performance is the worst scenario for a hard drive. Random read is particularly bad, as the drive has no way to predict where reads will come from and so throughput reduces to a level aligned to the mechanical capability of the device. When a hard drive processes multiple I/O streams, some cache optimisation is possible, but the net effect is similar to the performance seen with random I/O, even when the source data is sequential.

SMR drives have an even greater challenge, as the effective write size is the zone size (typically 256MB).

It’s clear that zoning could provide benefits in managing multiple streams of data and writing them sequentially to separate zones. This process requires logic either at the host/application layer or within the drive itself. SMR drives have three data management options:

- Device Managed – the drive is essentially plug-and-play, as with a traditional drive. The host has no awareness of the SMR format, and the drive manages the write optimisation. Performance, as a result, can be unpredictable.

- Host Managed – the host manages the process of writing to the drive, either within the O/S or application (more on this in a moment). Data has to be written sequentially; otherwise, I/O commands will fail.

- Host Aware – a superset of the device-managed and host-managed models. The drive is capable of managing random I/O but exposes zone information to the host so that it can optimise the write process.
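The host-managed model, combined with the idea of giving each stream its own zone, can be sketched as follows. This is a simplified illustration, not a real driver or the behaviour of any specific drive: the class names, the 65,536-block zone size (256MB of 4KiB blocks) and the stream names are all assumptions, and the sketch does not roll a stream over to a new zone when its current one fills.

```python
class ZoneWriteError(Exception):
    """Raised when a write breaks the sequential-write rule."""


class Zone:
    """A host-managed zone: writes must land exactly on the write pointer."""

    def __init__(self, start_lba: int, size_blocks: int):
        self.start_lba = start_lba
        self.size_blocks = size_blocks
        self.write_pointer = start_lba

    def write(self, lba: int, n_blocks: int) -> None:
        if lba != self.write_pointer:
            raise ZoneWriteError("write is not at the zone's write pointer")
        if lba + n_blocks > self.start_lba + self.size_blocks:
            raise ZoneWriteError("write would run past the end of the zone")
        self.write_pointer += n_blocks

    def reset(self) -> None:
        """Discard the zone's contents so it can be rewritten from the start."""
        self.write_pointer = self.start_lba


class StreamWriter:
    """Host-side logic: each stream appends sequentially to its own zone."""

    def __init__(self, zones):
        self.free_zones = list(zones)
        self.active = {}  # stream id -> Zone

    def append(self, stream_id: str, n_blocks: int) -> int:
        zone = self.active.get(stream_id)
        if zone is None:
            zone = self.free_zones.pop(0)  # allocate a zone to the new stream
            self.active[stream_id] = zone
        lba = zone.write_pointer
        zone.write(lba, n_blocks)
        return lba  # where the data landed


ZONE_BLOCKS = 65536  # assumed: 256MB zone of 4KiB blocks
drive = [Zone(i * ZONE_BLOCKS, ZONE_BLOCKS) for i in range(4)]
writer = StreamWriter(drive)

print(writer.append("backup", 8))  # first stream starts in zone 0
print(writer.append("logs", 8))    # second stream gets zone 1
print(writer.append("backup", 8))  # first stream continues sequentially
```

Interleaved writes from the two streams still arrive at each zone in strictly sequential order, which is the property a host-managed drive enforces. A write anywhere other than the write pointer raises an error, mirroring the failed I/O command described above.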

Zoned storage support was introduced into the Linux kernel from release 4.10 onwards. The dm-zoned device mapper exposes a standard block device while managing the translation between device I/O and the caching/reallocation process needed to write to SMR zones sequentially. Alternatively, applications can write directly to a zoned device and manage sequential I/O using a new set of specific SCSI commands. The application takes responsibility for translating random I/O into sequential writes. The assumption here is that the application understands the source data better than the cache on a drive and so can write multiple streams more effectively.
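On a Linux system with zoned block device support, the kernel reports a drive's zone model through sysfs. The sketch below reads the queue/zoned and queue/chunk_sectors attributes; the function names are illustrative, the device names in the demo are placeholders, and the sysfs_root parameter exists only so the code can be pointed at a test directory.

```python
from pathlib import Path
from typing import Optional


def zoned_model(device: str, sysfs_root: str = "/sys/block") -> str:
    """Return the zone model the kernel reports for a block device.

    Expected values are "none", "host-aware" or "host-managed";
    "unknown" is returned if the attribute cannot be read.
    """
    attr = Path(sysfs_root) / device / "queue" / "zoned"
    try:
        return attr.read_text().strip()
    except OSError:
        return "unknown"


def zone_size_bytes(device: str, sysfs_root: str = "/sys/block") -> Optional[int]:
    """Zone size in bytes, taken from chunk_sectors (512-byte sectors)."""
    attr = Path(sysfs_root) / device / "queue" / "chunk_sectors"
    try:
        return int(attr.read_text().strip()) * 512
    except (OSError, ValueError):
        return None


# Placeholder device names; substitute the devices present on your system.
for dev in ("sda", "nvme0n1"):
    print(dev, zoned_model(dev), zone_size_bytes(dev))
```

A conventional drive reports "none", while a device-managed SMR drive is also expected to appear as "none" because it hides its zones from the host.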

Zoned Namespaces

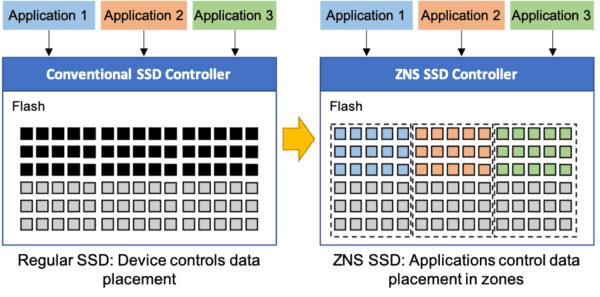

Zoned Namespaces (ZNS) has been under development by NVM Express for some time. We briefly discussed the technology in a pair of Storage Unpacked podcast episodes, recorded with J Metz in September 2019. The ZNS standard divides a physical NVMe SSD into logical zones, each of which may be mapped to a separate application. Now, instead of implementing wear levelling and garbage collection across the entire device, each zone can be treated independently. This reduces write amplification, reduces over-provisioning and can make DRAM usage within the drive more effective.
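The write amplification point can be illustrated with a deliberately simplified model, which is an assumption of this sketch rather than anything defined by the ZNS standard. When a conventional SSD garbage-collects an erase block that still holds some valid data, it must copy that data elsewhere before erasing, so the NAND absorbs more writes than the host issued. If the host instead resets a whole zone that holds no live data, nothing needs to be copied.

```python
def write_amplification(valid_fraction: float) -> float:
    """Simplified write amplification for garbage collection.

    valid_fraction is the share of a reclaimed block that still holds
    live data and must be copied elsewhere before the block is erased.
    Reclaiming the block frees (1 - valid_fraction) of it for new host
    writes, while a full block's worth of NAND writes takes place.
    """
    if not 0 <= valid_fraction < 1:
        raise ValueError("valid_fraction must be in the range [0, 1)")
    return 1 / (1 - valid_fraction)


for fraction in (0.0, 0.5, 0.75):
    print(f"{fraction:.0%} valid data -> WA {write_amplification(fraction):.1f}")
```

In this model, a zone that is reset only when all of its data is stale corresponds to a valid fraction of zero and a write amplification of 1, the ideal case. Real devices behave in more complex ways, so treat the figures as indicative only.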

To understand why this is the case, we need to look at the capabilities of modern SSDs. Today, drive capacity has reached 32TB, with the promise of 64TB just around the corner. We’ve seen some proof of concept devices and a 3.5” 100TB SSD on the market already.

Mixed Workloads

At this level of capacity, we can expect a collection of drives to provide a petabyte of capacity with ease. However, unless your requirement includes high-volume analytics, it’s unlikely a single application will need this level of capacity. The more likely scenario is that we see pools of storage supporting multiple workloads, as we have done in the past with shared storage and storage area networks.

In this type of configuration, some workloads will be read-intensive, some write-heavy and some mixed. With ZNS, each application (or application group) could be offered a unique zone of an SSD, providing more consistent performance for read-only workloads and managing garbage collection for more write-intensive data.

I would even speculate that we could see multi-variant SSDs, where some part of the SSD is configured as SLC/MLC and other areas as TLC/QLC. This design would allow write-intensive workloads to maintain better endurance (at lower density) on SLC/MLC portions, while other areas that need read capabilities are configured as TLC/QLC. This process already happens today, but I predict we’ll see more innovative uses of ZNS and multi-variant SSDs that use high-capacity devices more efficiently.

zonedstorage.io

This post provides a flavour of what to expect with high-capacity devices. Western Digital has developed a dedicated website at zonedstorage.io that contains much more detail and information on zones, SMR and ZNS. You can also watch the Tech Field Day presentation from Storage Field Day 19, given by Swapna Yasarapu.

For details on ZNS for NVMe SSDs, of course, listen to our podcasts (here & here). More information is available at the NVM Express website.

The Architect’s View

We have to find ways to manage high-capacity devices, especially HDDs, which are naturally limited by their mechanical nature. Zoned hard drives haven’t seen widespread adoption because they require application support. This issue is an area where storage systems manufacturers will need to step up and help to drive adoption. ZNS will need similar ingenuity from system manufacturers to gain the best from the technology. Software-defined storage developers face some interesting challenges, too, as these solutions become more mainstream.

Here’s a final thought. How are we going to improve device reliability, when so much capacity is invested in a single device? The cost/capacity/reliability calculation is going to be a challenge. Media vendors will have to address this question if we’re to put trust in single devices that could cost from hundreds to thousands of dollars each.

Post #a465. Copyright (c) 2007-2020 Brookend Ltd. No reproduction in whole or part without permission.