Amazon Web Services recently introduced a new class of S3 object storage called S3 One Zone-Infrequent Access (One Zone-IA). This extends the options for S3 to four classes, each with its own level of availability and associated cost. What is the point of introducing another option, and what could it be used for?

S3 Options

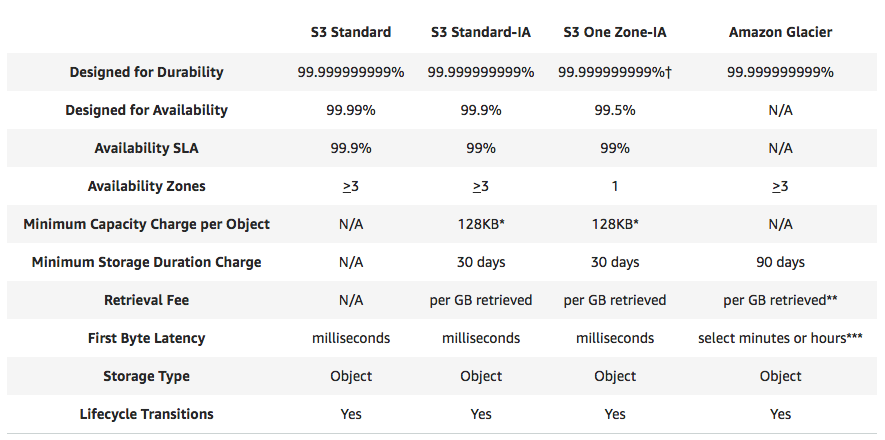

Until now there were three S3 classes. The initial Standard offering provided eleven nines of durability (99.999999999%), with 99.99% anticipated availability and a 99.9% availability SLA. This level of availability is achieved by duplicating data across multiple (usually three or more) Availability Zones, which can be thought of as separate data centres within the same geographical region. If a DC goes down, there are at least two more copies out there just in case.

Over time AWS introduced Glacier, long-term cold storage for S3. This has the same level of durability (as do all S3 levels), but no availability guarantees and a time to first byte measured in minutes or hours. Data is still protected across three or more availability zones. The third class, S3 Standard Infrequent Access (Standard-IA), offers 99.9% availability with a 99% SLA, and also spans three availability zones.
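To put those percentages in perspective, the sketch below converts an availability figure into the downtime it would permit. It annualises the numbers purely for illustration, which is an assumption: the AWS SLA itself is assessed per monthly billing cycle. The function name is made up for this example.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def max_downtime_hours(availability_pct: float) -> float:
    """Hours per year a service could be unavailable at this availability."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


# Availability figures quoted in the text above.
figures = [
    ("Standard (anticipated)", 99.99),
    ("Standard (SLA)", 99.9),
    ("Standard-IA (anticipated)", 99.9),
    ("Standard-IA (SLA)", 99.0),
]

for label, pct in figures:
    print(f"{label}: {pct}% allows about {max_downtime_hours(pct):.2f} hours/year")
```

On this reading, dropping from 99.99% to 99.9% moves the tolerated downtime from under an hour a year to most of a working day.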

S3 One Zone Infrequent Access

The new class – S3 One Zone-IA – sits one level below Standard-IA, offering 99.5% availability with a 99% availability SLA. Pricing is typically 20% lower than Standard-IA. I’ve been trying to see how this reduced-cost option could benefit customers. AWS suggests this new class of object storage could be used for secondary backups or for data that could be re-created from elsewhere. Look at the following per-GB charges, based on US East (Ohio). Note that for each option, per-operation and data transfer charges are the same.

- S3 Standard Storage – 2.3¢/GB/month

- S3 Standard Infrequent Access – 1.25¢/GB/month

- S3 One Zone Infrequent Access – 1¢/GB/month

- Glacier – 0.4¢/GB/month
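As a rough illustration of what these rates mean in practice, the following sketch compares monthly storage charges and the percentage saving between classes. It uses only the per-GB prices listed above and ignores request and transfer charges; the dictionary and function names are hypothetical, chosen for this example.

```python
# Storage prices from the list above, in cents per GB per month (US East, Ohio).
PRICE_CENTS_PER_GB_MONTH = {
    "Standard": 2.3,
    "Standard-IA": 1.25,
    "One Zone-IA": 1.0,
    "Glacier": 0.4,
}


def monthly_cost_usd(storage_class: str, gigabytes: float) -> float:
    """Monthly storage charge in dollars (storage only, no requests or transfer)."""
    return PRICE_CENTS_PER_GB_MONTH[storage_class] * gigabytes / 100


def saving_pct(from_class: str, to_class: str) -> float:
    """Percentage saved on storage by moving data from one class to another."""
    old = PRICE_CENTS_PER_GB_MONTH[from_class]
    new = PRICE_CENTS_PER_GB_MONTH[to_class]
    return (old - new) / old * 100


capacity_gb = 100_000  # illustrative capacity, roughly 100 TB
for name in PRICE_CENTS_PER_GB_MONTH:
    print(f"{name}: ${monthly_cost_usd(name, capacity_gb):,.2f} per month")

print(f"Standard-IA to One Zone-IA saves {saving_pct('Standard-IA', 'One Zone-IA'):.1f}%")
```

The Standard-IA to One Zone-IA saving works out at the 20% quoted earlier, since 0.25¢ is one fifth of 1.25¢.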

First of all, could storing S3 data in a single zone make pricing cheap enough to spread data across multiple regions or cloud providers? Possibly. The saving over Standard is big enough to put a second copy into another AWS region, or with another provider of similar pricing. The problem is what would happen in failure scenarios. If one location lost all its data, copying it again from another region would be expensive in terms of network traffic (typically around 2¢/GB). Copying data from AWS to a third-party provider would incur charges, as would the reverse process. The question is: how likely is AWS, or any cloud provider, to lose a DC, and is that a risk worth taking?
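One way to weigh that risk is to ask how many months of storage savings a single full re-copy would consume. The sketch below assumes two One Zone-IA copies at 1¢/GB/month each (so 2¢ in total, assuming the second location is priced like Ohio) against a single Standard copy at 2.3¢, with a re-copy cost of 2¢/GB. The function name is hypothetical.

```python
def months_to_recoup(transfer_cents_per_gb: float,
                     baseline_cents_per_gb_month: float,
                     alternative_cents_per_gb_month: float) -> float:
    """Months of storage savings needed to pay for one full re-copy of the data."""
    saving = baseline_cents_per_gb_month - alternative_cents_per_gb_month
    if saving <= 0:
        raise ValueError("the alternative is not cheaper than the baseline")
    return transfer_cents_per_gb / saving


standard = 2.3              # one copy in S3 Standard
two_one_zone = 2 * 1.0      # assumption: two One Zone-IA copies at the same price
recopy = 2.0                # approximate transfer charge per GB from the text

months = months_to_recoup(recopy, standard, two_one_zone)
print(f"One full re-copy uses up about {months:.1f} months of savings")
```

Under these assumptions the saving is only 0.3¢/GB/month, so a single failure that forces a full re-copy would cancel more than six months of benefit.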

Tiering

Another option is to tier data within AWS. For customers with existing Standard storage, cascading to Standard Infrequent Access and then Glacier is quite attractive; the only compromise is in access time and some availability. Using One Zone Infrequent Access would save some cost, but a similar saving could be achieved by pushing a little more data to Glacier, without compromising on redundancy across availability zones.
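To gauge how much is "a little more", this sketch works out what share of Standard-IA data would have to move to Glacier for the blended price to equal the One Zone-IA rate. It uses the Ohio storage prices quoted earlier, ignores retrieval and request charges, and the function name is hypothetical.

```python
def glacier_fraction_to_match(ia_price: float,
                              one_zone_price: float,
                              glacier_price: float) -> float:
    """Fraction f of Standard-IA data to move to Glacier so that
    (1 - f) * ia_price + f * glacier_price == one_zone_price."""
    if not glacier_price < one_zone_price <= ia_price:
        raise ValueError("expected glacier < one zone <= standard-IA pricing")
    return (ia_price - one_zone_price) / (ia_price - glacier_price)


# Prices in cents per GB per month, from the list earlier in the post.
fraction = glacier_fraction_to_match(ia_price=1.25, one_zone_price=1.0, glacier_price=0.4)
print(f"Move about {fraction:.0%} of Standard-IA data to Glacier to match One Zone-IA")
```

With these figures the answer is a little under 30%, so whether the trade is worthwhile depends on how much of the infrequently accessed data can tolerate Glacier's retrieval times.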

Existing Multi-Cloud

One area where customers could definitely save is where data is already spread across multiple clouds. In this case, protection is higher than needed, so dropping to One Zone Infrequent Access makes sense.

The Architect’s View

The good thing about this additional option from AWS is having more choice. Customers might have to be a bit more thoughtful with lifecycle rules, but that aside, having the ability to save 20% on some data charges is a positive move. However, my more cynical side looks at the pricing of S3 since inception and sees a halt to the regular price reductions. The last drop in price for S3 that I can see is November 2016, when the current pricing for Standard was set. Standard Infrequent Access hasn’t reduced in price since its introduction in September 2015. So, is AWS reducing the price by reducing the quality of the service?

What does this scenario mean for on-premises object storage? With disk capacities increasing rapidly, on-premises hardware costs are falling by perhaps 30% per annum. The gap between cloud and on-premises is narrowing (if there ever was one). Should Amazon, Google, Azure, Backblaze and others choose to hold off on price reductions, then the case for hybrid object storage is certainly easier to make. The real problem, though, is that the cost model is extremely complicated, with many moving parts. Cloud providers have definitely learned from the mobile and other utility industries, where comparing offerings is hard, if not impossible. As a result, many customers will no doubt just stay where they are today.
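The effect of a steady decline compounds quickly. As a rough sketch, assuming the 30% annual figure above holds and cloud prices stay flat, relative hardware cost after a number of years can be estimated as follows (the function name is hypothetical and the starting cost is normalised to 1.0):

```python
def relative_cost_after(years: int, annual_decline: float = 0.30) -> float:
    """Cost relative to today after compounding a fixed annual decline."""
    if years < 0 or not 0 <= annual_decline < 1:
        raise ValueError("years must be >= 0 and decline must be in [0, 1)")
    return (1 - annual_decline) ** years


for year in range(4):
    print(f"Year {year}: on-premises hardware at {relative_cost_after(year):.0%} of today's cost")
```

Three years at that rate leaves hardware at roughly a third of its starting cost, which is the kind of shift that makes a flat cloud price look steadily less competitive.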

Copyright (c) 2007-2020 – Post #644A – Chris M Evans, first published on https://www.architecting.it/blog, do not reproduce without permission.