IT organisations have been benchmarking storage since the first commercial disk drives were developed in the 1950s. Persistent storage has always been slower than system memory, and as a result, can be the bottleneck in many systems. As we move into greater use of containerisation, benchmarking is more important than ever to understand the interaction of increasingly closely-coupled storage, memory and compute.

Why Measure?

It’s a truism of the IT industry that persistent storage is much slower than volatile or persistent memory. Even with today’s NAND flash and Intel Optane products, the difference between the two equates to orders of magnitude. While a significant part of data processing can be retained in DRAM, at some point applications have to read more data into memory or commit updates to disk for persistence. As a result, for most applications, performance and (critically) user experience are driven by the speed of storage.

Benchmarking, or measuring the speed of one storage solution against another, provides a basis against which the efficiency of a storage solution can be judged.

Why is this important? In an ideal world, IT organisations would place all data on the fastest storage possible. However, financial constraints and the technical constraints of attaching persistent storage to an application mean compromise is inevitable. Benchmarking helps answer the following questions:

- Am I getting the best value for money from my storage infrastructure?

- Am I leaving unused resources (like IOPS) on the table?

- Is my storage operating efficiently?

- Am I using the right combination of CPU/RAM and storage in my solution?

Industry Benchmarks

Industry recognised and accepted benchmarks have been available for many years from organisations such as the Standard Performance Evaluation Corporation (SPEC) and the Storage Performance Council. Both of these organisations focus on testing media and storage systems using processes that try to reflect real-world applications. Other techniques include measuring and replaying I/O performance profiles to simulate a specific workload within a business.

While these benchmarks aren’t perfect, they give prospective customers a standardised way to compare one vendor’s product with another. Any testing methodology should be examined in detail and not merely taken at face value. Vendors have an incentive to tune the system and the results in their favour, while benchmark developers want to ensure their testing process is as fair as possible.

Evolution

The development of new technology means benchmarks have to evolve. Some testing regimes have failed to keep up with techniques like data de-duplication or high degrees of RAM caching, for example. As we start to see persistent memory adopted in the enterprise, benchmarks will need to cater for scenarios where data isn’t stored on external appliances but manipulated through solutions such as MemVerge, which further complicates the question of what constitutes a benchmark test.

Containerisation and Kubernetes have revived the concept of locally attached storage, driven in part by the abstraction of the public cloud. Locally attached storage is therefore another area that benchmark development needs to address.

Containers

Containerisation provides an interesting challenge for storage. As applications have become more abstracted from server hardware, the move to containers results in much more efficient and highly parallel use of resources. This process started with server virtualisation in the early 2000s. A combination of server/application sprawl and the slowdown of Moore’s Law resulted in significant consolidation savings that both exploited multi-core CPUs and moved away from “one application per server” deployments.

Where server virtualisation delivered dozens of applications per server, containerisation offers the capability for hundreds or thousands of micro-services per server, each expecting fair and immediate access to shared memory and storage resources.

Parallelism

The interesting aspect of this changing application landscape is the increased degree of parallelism in every part of the IT infrastructure. CPUs now run with dozens of cores and have to provide access to shared memory and storage to thousands of competing processes and threads. The NVMe protocol was developed partially as a response to the shortcomings of SCSI in this regard. The SAS protocol still operates with a single I/O queue, which places an immediate bottleneck in the way of efficient I/O.
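To put the queueing difference in rough numbers, here is a minimal sketch. The `QueueModel` class is hypothetical, and the limits used (one queue of about 254 commands for SAS, about 64K queues of about 64K commands for NVMe) are commonly quoted protocol ceilings that are treated here as assumptions. Real devices and drivers usually expose far fewer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Hypothetical model of a protocol's command queueing limits."""
    name: str
    queues: int   # number of independent I/O queues
    depth: int    # commands that can be outstanding per queue

    @property
    def max_outstanding(self) -> int:
        """Upper bound on commands in flight across all queues."""
        return self.queues * self.depth


# Assumed, commonly quoted ceilings; not measured values.
SAS_LIKE = QueueModel("single-queue (SAS-like)", queues=1, depth=254)
NVME_LIKE = QueueModel("multi-queue (NVMe-like)", queues=64 * 1024, depth=64 * 1024)

if __name__ == "__main__":
    for model in (SAS_LIKE, NVME_LIKE):
        print(f"{model.name}: up to {model.max_outstanding:,} commands in flight")
```

The raw ceiling matters less than the structure. With many queues, each CPU core can submit I/O on its own queue without contending for a single shared one.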

Measurement Metrics

It’s clear that storage and storage benchmarking have to evolve to cater to the needs of containerised applications. What should be measured, and why is it important?

The classic triumvirate of storage metrics is latency, IOPS and throughput. Latency measures single I/O transaction time – how quickly a single read or write request is serviced. IOPS measures the number of individual I/O operations processed per second, and throughput measures the volume of data processed per unit of time, typically expressed in MB/s or GB/s.
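The three metrics are linked by simple arithmetic, which is one reason the distinctions between them blur in practice. The sketch below is illustrative only: the function names and sample figures are assumptions, not measurements. It shows that throughput is IOPS multiplied by block size, and that, by Little's Law, sustained IOPS is the number of outstanding I/Os divided by mean latency.

```python
# Illustrative sketch: function names and sample figures are assumptions,
# not measurements from any real device.

def throughput_mib_per_s(iops: float, block_size_kib: float) -> float:
    """Throughput (MiB/s) implied by an IOPS figure at a fixed block size."""
    return iops * block_size_kib / 1024


def iops_from_latency(latency_ms: float, outstanding_ios: int = 1) -> float:
    """Little's Law: sustained IOPS = outstanding I/Os / mean latency (s)."""
    return outstanding_ios / (latency_ms / 1000)


if __name__ == "__main__":
    # 100,000 IOPS of 4 KiB blocks
    print(f"{throughput_mib_per_s(100_000, 4):.1f} MiB/s")
    # 0.5 ms latency with 1 and then 32 I/Os in flight
    print(f"{iops_from_latency(0.5, 1):.0f} IOPS")
    print(f"{iops_from_latency(0.5, 32):.0f} IOPS")
```

With a single I/O in flight, latency alone caps IOPS. Higher figures are only reachable by issuing requests in parallel, so a quoted IOPS number means little without the queue depth and block size used to obtain it.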

OLTP applications are typically latency-sensitive, while batch processing or analytics is likely to see more significant benefit from higher throughput levels. However, as we’ll discuss in a moment, the distinctions are not that clear cut.

Media Focus

Individual media devices can easily be benchmarked to measure latency, IOPS and throughput. Storage hardware vendors typically quote IOPS based on small block sizes and throughput based on larger block sizes, because this maximises the apparent capability of a device. In HDD media, latency has generally been similar across drives spinning at the same speed – the faster the drive rotates, the lower the latency. Solid-state media (NAND and Optane) have significantly reduced latency and delivered greater throughput and IOPS.
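The link between rotation speed and latency can be sketched with basic arithmetic. On average, a request waits half a revolution for the target sector to arrive under the head. The function name below is an assumption for illustration, and the calculation covers only the rotational component, leaving out seek and transfer time.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


if __name__ == "__main__":
    for rpm in (7_200, 10_000, 15_000):
        print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

A 15,000 RPM drive averages 2 ms of rotational delay and a 7,200 RPM drive a little over 4 ms. This is why drives of the same speed class tend to show similar latency whichever vendor makes them.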

Systems Focus

Building complex systems from storage media results in storage appliances that exhibit very different characteristics from each other and from the media they use. Early all-flash systems, for example, were at risk of underutilising the performance of NAND flash due to bottlenecks elsewhere in the system.

Vendors developed new solutions or adapted existing ones to cater for the specific benefits of new media. This process is going through another transformation as persistent memory gains broader adoption in the enterprise.

Kubernetes

Kubernetes is gaining increased traction as a platform for application deployment. As discussed on a recent Storage Unpacked podcast, storage tends to arrive late in the game because integrating it into new frameworks is complicated. Storage needs to retain state, a task much more complex than building out compute or networking.

Early storage for containers used local storage or proprietary plugins. The development of the Container Storage Interface has abstracted that connectivity and provides the ability to add service-based metrics to the process of mapping storage to applications.

Containers are designed to be efficient, and that means storage for containers (including Kubernetes) has to meet that requirement too. Container-attached storage (CAS) solutions like Portworx or StorageOS are integrated into Kubernetes and consume CPU, memory and storage, just like the data plane in a hyper-converged platform. They therefore have to be efficient and make the best use of local resources. CAS solutions can’t leave IOPS on the table or consume more resources than the application they support.
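One way to make "leaving IOPS on the table" concrete is to compare what the storage layer delivers against what the underlying media can do, alongside the CPU the storage layer consumes relative to the application. The sketch below is a hypothetical illustration. The `CasMeasurement` class, its field names and the sample numbers are all assumptions, not results from any tested product.

```python
from dataclasses import dataclass


@dataclass
class CasMeasurement:
    """Hypothetical figures gathered from a single benchmark run."""
    raw_device_iops: float     # IOPS of the media measured without the CAS layer
    delivered_iops: float      # IOPS seen by the application through the CAS layer
    storage_cpu_cores: float   # CPU cores consumed by the storage layer
    app_cpu_cores: float       # CPU cores consumed by the application

    def iops_efficiency(self) -> float:
        """Fraction of the raw media capability that reaches the application."""
        return self.delivered_iops / self.raw_device_iops

    def cpu_overhead_ratio(self) -> float:
        """Storage-layer CPU use relative to the application's own CPU use."""
        return self.storage_cpu_cores / self.app_cpu_cores


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    run = CasMeasurement(400_000, 300_000, 2.0, 8.0)
    print(f"IOPS efficiency: {run.iops_efficiency():.0%}")
    print(f"CPU overhead vs application: {run.cpu_overhead_ratio():.0%}")
```

An efficiency well below 100% means IOPS are being left on the table. A CPU overhead ratio above 100% would mean the storage layer costs more compute than the application it supports.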

The Architect’s View™

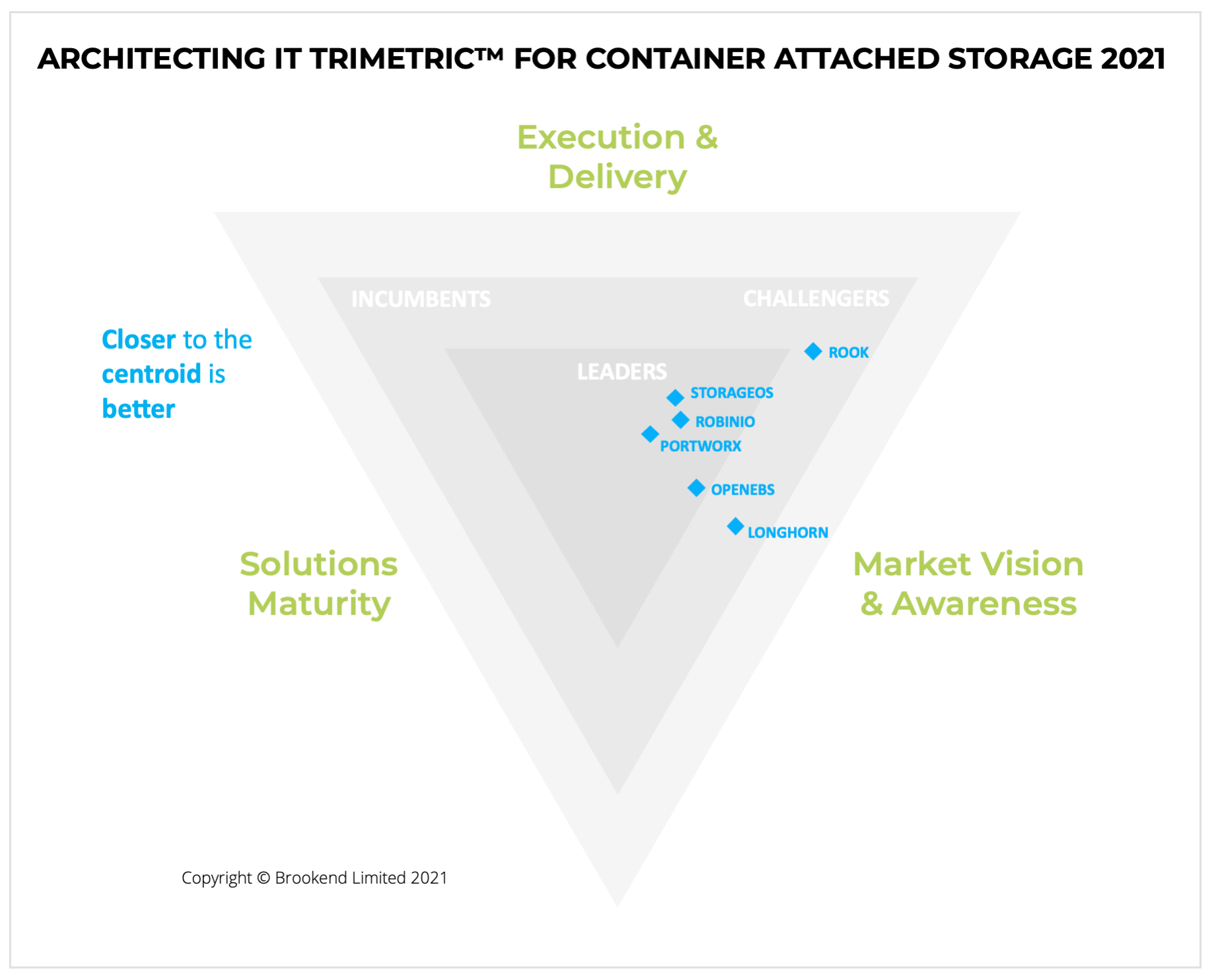

At Architecting IT, we’ve been working on evaluating the leading container-attached storage solutions for Kubernetes. The feature comparison results will be published this week as an eBook, while the results of the performance testing will be published next month.

Our testing has shown clear differences in performance between vendor solutions running on the same physical infrastructure. The results show that CAS solutions are not all the same, which has a direct impact on the performance of all applications that need persistent storage.

In the follow-up to this post, we will look at how the performance analysis of CAS solutions was carried out, in particular the process required to ensure, as traditional benchmarks have done in the past, that measurements are fair and equal. This will provide the “how” to accompany the “why” covered here.

Copyright (c) 2007-2021 – Post #2ea7 – Brookend Ltd, first published on https://www.architecting.it/blog, do not reproduce without permission.