HYCU Protégé enables application workloads in virtual machines to be migrated from on-premises environments into Google Cloud Platform. In this post we look at how the process works and the prerequisites that need to be in place to make the migration go smoothly.

Background

HYCU is a data protection platform from HYCU Inc. The solution runs on-premises to protect applications on Nutanix HCI systems and VMware vSphere, and is also available on Microsoft Azure and Google Cloud Platform. We have previously published a quick review and tryout of HYCU for Azure; related posts include:

- HYCU Announces GA of HYCU for Azure

- Comtrade Software Becomes HYCU and releases HYCU 3.0

- Validating HYCU Deployment By The Numbers

Protégé

HYCU runs as one or more virtual machines on-premises and as a service in the public cloud. In each case, administrators access the platform through a web GUI; on-premises deployments can also be managed through a CLI or API. HYCU Protégé (announced in 2019 and generally available in December) brings all these instances together into a single management view and adds the capability to migrate virtual instances to and from Google Cloud Platform. Protégé itself is installed as a separate on-premises virtual machine.

Testing

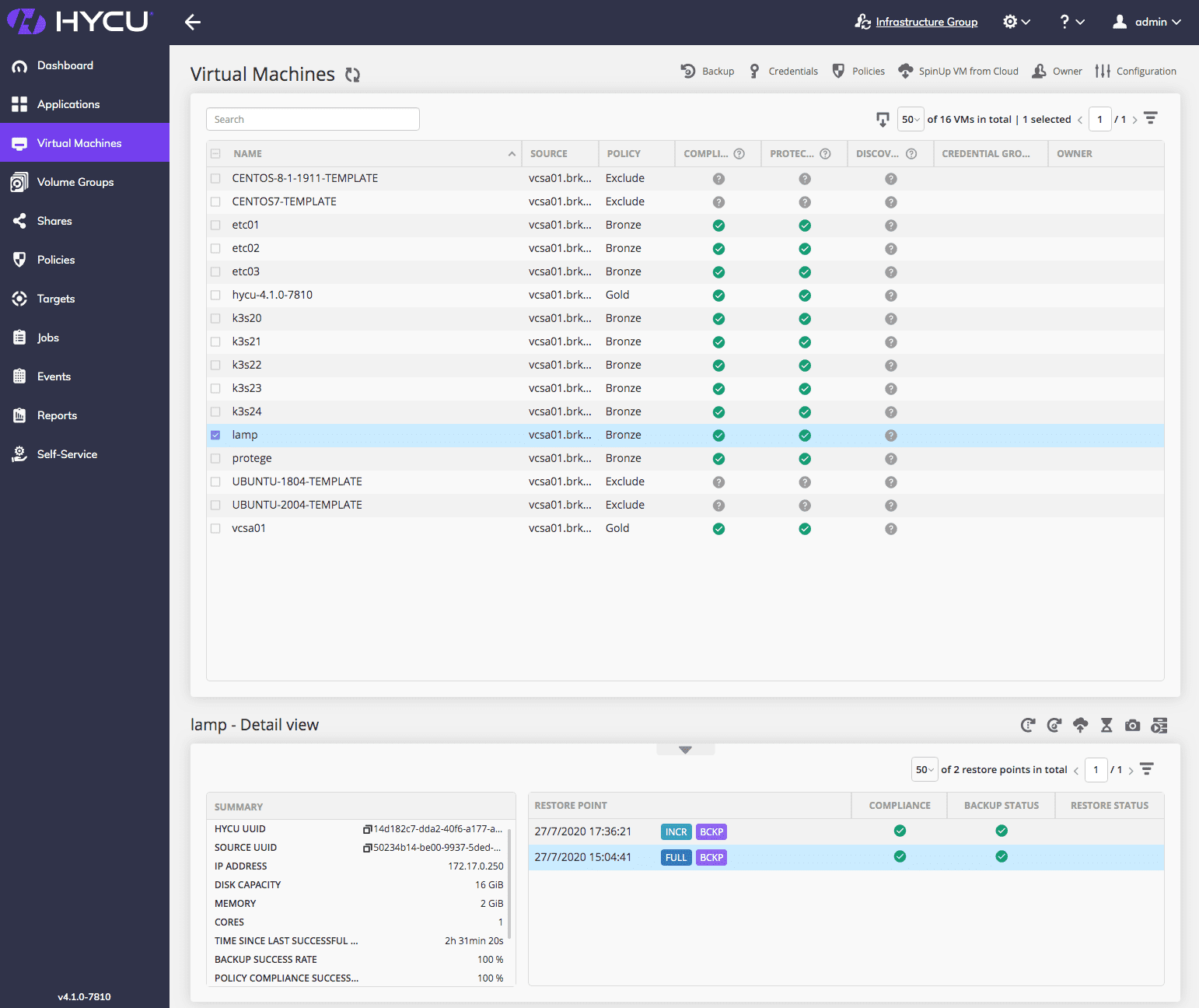

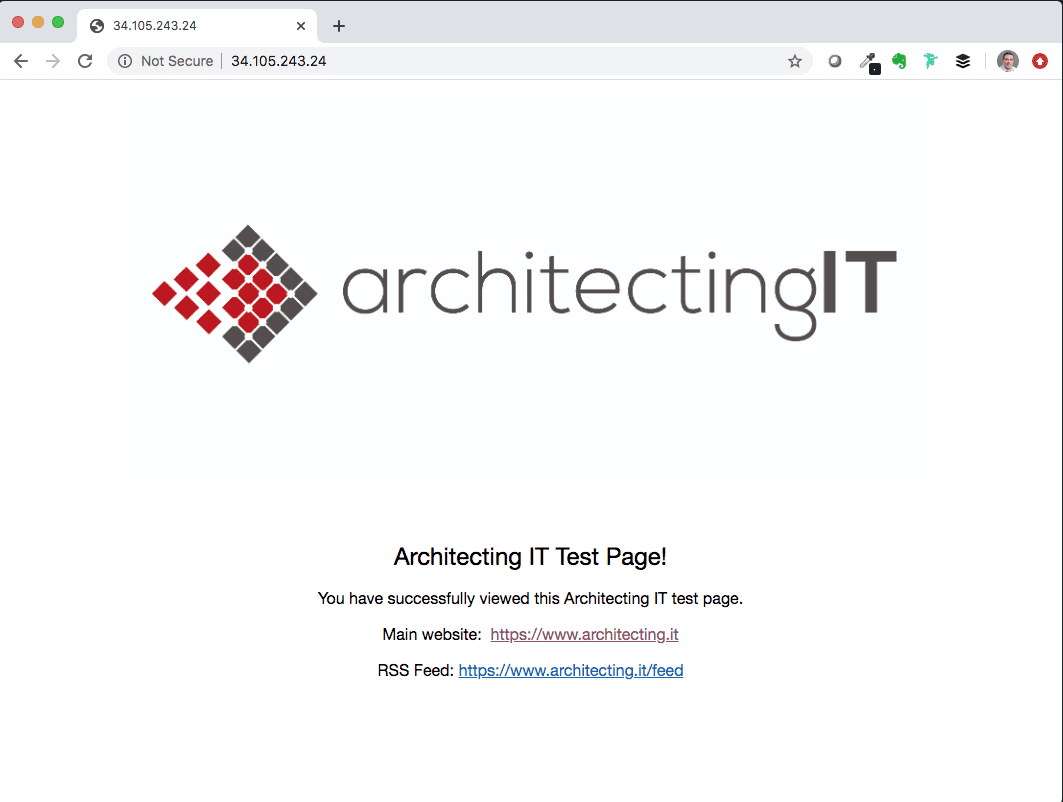

In this test, I’m going to migrate a virtual instance running in my on-premises test lab into GCP. The setup, shown in Image 1, is pretty simple. HYCU and Protégé run as on-premises VMs, alongside an additional test application, “lamp”. This is a simple CentOS 7 VM running Apache with a custom web page that displays the Architecting IT logo. Backup data is written to a local NAS share in the lab (currently a FreeNAS VM).

First, I ran a backup of the VM, added the Apache components and manually kicked off another incremental backup. Image 2 shows the result of the backup, which is compliant with my Bronze policy.

Cloud Upload

For any backup/restore point, I can push that VM image to GCP. Image 3 shows the option highlighted with a red box. Image 4 shows the dialogue box displayed when the option is selected, which lets me choose the target cloud account (I could have more than one), project, region and zone. However, as shown here, the “cloud readiness” check hasn’t been successfully performed on our VM.

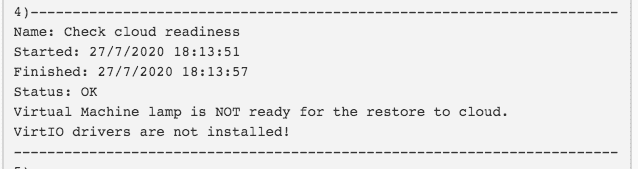

HYCU performs some basic tests on every VM at each backup to validate that the VM image is suitable for running on GCP. These include checking that the VM uses a dynamic (DHCP-assigned) IP address, that it is externally accessible via SSH, and that the virtio drivers are installed.
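This post doesn't describe how HYCU implements these checks, so the following is only a hypothetical, guest-side approximation of the first two, assuming a CentOS/RHEL 7 guest that uses network-scripts ifcfg files and systemd. The function names uses_dhcp and sshd_ready are illustrative, not part of HYCU. The third condition, virtio driver availability, depends on how the guest boots and is covered in the following sections.

```shell
# Hypothetical guest-side approximations of two cloud readiness conditions.
# This is NOT HYCU's implementation; paths assume a CentOS/RHEL 7 guest.

# Dynamic IP: succeed if the ifcfg file passed as $1 sets BOOTPROTO to dhcp
# (quoted or unquoted, any case). Commented-out lines are ignored because
# the pattern only matches lines that start with optional whitespace.
uses_dhcp() {
    proto=$(sed -n 's/^[[:space:]]*BOOTPROTO=//p' "$1" \
        | tr -d "\"' \t" | tr '[:upper:]' '[:lower:]')
    [ "$proto" = "dhcp" ]
}

# SSH access (guest side only): succeed if sshd is enabled at boot and
# currently running. Cloud-side firewall rules are a separate question.
sshd_ready() {
    systemctl is-enabled --quiet sshd && systemctl is-active --quiet sshd
}
```

For example, `uses_dhcp /etc/sysconfig/network-scripts/ifcfg-eth0 && sshd_ready && echo ok` (adjusting the interface name) would report success on a suitable guest. Whether the VM is reachable from outside still depends on network and firewall rules that a guest-side check can't see.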

Para-virtualisation

GCP runs virtual instances under KVM and so needs para-virtualised drivers to support pseudo-physical devices such as HDDs and network cards. It isn’t in the scope of this post to cover how virtualisation works, but taking a VM image running on vSphere into GCP requires the virtio drivers to be available to the kernel at boot time. Device drivers can be built directly into the kernel or loaded dynamically as modules after boot. However, for images imported into GCP, the para-virtualised PCI, SCSI and network drivers must be available from the start of the boot process, either compiled into the kernel or included in the initramfs, rather than loaded dynamically later (see this GCP page and Image 5 for details).
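To see which case applies to a particular kernel, one option is to read its build configuration, which CentOS installs as /boot/config-<version>: an option set to `y` is built into the kernel and available at boot, while `m` marks a loadable module that has to be placed in the initramfs. A minimal sketch using the mainline Kconfig symbols for the four drivers; the function name virtio_build_mode is hypothetical.

```shell
# Hypothetical helper: report how each virtio driver is provided by a kernel,
# based on its build config file (e.g. /boot/config-$(uname -r) on CentOS 7).
#   y -> built into the kernel image, so available at boot
#   m -> loadable module, so it must be included in the initramfs
#   anything else (e.g. "# CONFIG_X is not set") -> driver not available
virtio_build_mode() {
    config=$1
    for opt in CONFIG_VIRTIO_PCI CONFIG_VIRTIO_BLK CONFIG_SCSI_VIRTIO CONFIG_VIRTIO_NET; do
        # Extract the value after "=" from the exactly matching option line
        val=$(sed -n "s/^${opt}=//p" "$config")
        case $val in
            y) echo "$opt built-in" ;;
            m) echo "$opt module" ;;
            *) echo "$opt missing" ;;
        esac
    done
}
```

Running `virtio_build_mode "/boot/config-$(uname -r)"` on the guest lists each driver; any reported as `module` needs to be added to the initramfs before the image is pushed to GCP.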

Cloud Readiness

HYCU performs a cloud readiness check as part of every backup. This process requires credentials to be assigned to each VM, as shown in Image 6, where the “LAMP ACCESS” credentials have been assigned and successfully validated against the VM. Images 7 & 8 show the results of the readiness check before and after the credentials were added. The check shows that our lamp VM doesn’t have the virtio drivers available at boot time.

DRACUT

On Linux, this problem is easy to solve. The virtio drivers have been available to the kernel since version 2.6.32, but we need to make them available at boot time. The dracut utility builds the initramfs, an initial RAM-based file system that is loaded at boot to bring in the drivers and basic tools needed to mount the real root file system. Its --add-drivers option includes additional kernel modules in that image.

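```shell
# Rebuild the running kernel's initramfs with the virtio drivers added (-f: overwrite existing image, -v: verbose)
dracut --add-drivers "virtio_pci virtio_blk virtio_scsi virtio_net" -f -v "/boot/initramfs-$(uname -r).img" "$(uname -r)"
```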
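Before rebooting, it may be worth confirming that the rebuilt image actually contains the drivers. dracut's companion tool lsinitrd lists the contents of an initramfs image; the hypothetical function below reads such a listing on standard input and prints any of the four modules it can't find. It assumes the drivers are built as modules: a driver compiled into the kernel won't appear in the listing at all.

```shell
# Hypothetical check: read an initramfs listing (e.g. lsinitrd output) on
# stdin and print each required virtio module that is not present.
# Returns 0 only if all four modules were found.
missing_virtio_modules() {
    listing=$(cat)
    status=0
    for mod in virtio_pci virtio_blk virtio_scsi virtio_net; do
        # Match the module file name, compressed (.ko.xz) or not (.ko)
        if ! printf '%s\n' "$listing" | grep -q "/${mod}\.ko"; then
            echo "missing: $mod"
            status=1
        fi
    done
    return "$status"
}
```

On the guest, this could be used as `lsinitrd "/boot/initramfs-$(uname -r).img" | missing_virtio_modules && echo "virtio drivers present"`.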

Once the initramfs has been rebuilt, we can reboot and rerun the backup. This time (Image 9), the cloud readiness checks complete successfully and it’s time to push the VM to GCP. Running through the SpinUp process again, the VM image is now uploaded through a temporary VM instance created in GCP. I assume HYCU needs this proxy to receive the data and write it to a disk in GCP, after which a new VM image is created and booted from the new disk.

Image 10 shows both the new lamp VM and the temporary proxy used to upload the data. One further change is needed to open the VM to HTTP(S) traffic (Image 11), after which we can browse to the homepage via the GCP-assigned IP address (Image 12). The final step is to register the uploaded lamp VM with the HYCU controller running in GCP. The status of both backup environments is now visible in Protégé (Image 13).
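The HTTP(S) change can also be scripted with the Google Cloud SDK. A minimal sketch, assuming an authenticated gcloud CLI with a default project set and an instance attached to the `default` VPC network; the rule name `allow-web`, the network tag `web-server` and the function name open_web_ports are hypothetical choices, and the zone must match the one selected at upload.

```shell
# Hypothetical helper: allow HTTP/HTTPS to a GCP instance and print its
# external IP. Assumes an authenticated gcloud CLI and the "default" network.
# Usage: open_web_ports INSTANCE ZONE
open_web_ports() {
    instance=$1
    zone=$2
    # Ingress rule applying to any instance carrying the "web-server" tag
    gcloud compute firewall-rules create allow-web \
        --network=default --direction=INGRESS \
        --allow=tcp:80,tcp:443 --source-ranges=0.0.0.0/0 \
        --target-tags=web-server
    # Attach the tag to the uploaded instance so the rule applies to it
    gcloud compute instances add-tags "$instance" \
        --zone="$zone" --tags=web-server
    # Print the external (NAT) IP address to browse to
    gcloud compute instances describe "$instance" --zone="$zone" \
        --format='get(networkInterfaces[0].accessConfigs[0].natIP)'
}
```

For example, `open_web_ports lamp <zone>`. The firewall rule only needs to be created once per network; on a second run the create step reports that the rule already exists, and the remaining commands still run because the function doesn't stop on errors.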

Cloud Bursting

This example is relatively simple, but the same process could be used in practice to migrate a production VM into the public cloud. Alternatively, it offers a way to copy production data into the cloud for testing or other uses (subject to data obfuscation and other changes). More complex applications need more thought: the latency between VMs and environments has to be validated, and the networking and security implications understood. Some pre-work is also needed to ensure that VMs will boot with the correct drivers and have the right access keys, and that work can be built into master images.
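For master images, one way to make the driver change persistent is a dracut configuration file rather than a one-off command. dracut reads the *.conf files in /etc/dracut.conf.d/ every time it builds an initramfs, including the rebuild triggered by a kernel update, so new kernels would pick up the drivers too. A minimal sketch; the file name gce-virtio.conf is a hypothetical choice. dracut config files use shell variable syntax, and the append form below is equivalent to the `add_drivers+=" ... "` form shown in the dracut.conf documentation.

```shell
# /etc/dracut.conf.d/gce-virtio.conf  (hypothetical file name)
# Always include the para-virtualised drivers GCP needs at boot.
# Appending (with surrounding spaces) keeps entries set by other config files.
add_drivers="$add_drivers virtio_pci virtio_blk virtio_scsi virtio_net "
```

After adding the file, running `dracut -f` rebuilds the initramfs for the running kernel with the new setting.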

The Architect’s View

This migration process was easy to perform and could be a good way to migrate VMs either into or out of GCP. I would like to see Protégé retain more data, so that the history of a protected VM continues when the VM is moved rather than copied. Currently, the moved VM appears as a new entity, so a local naming convention would be needed to maintain application tracking. I’ve always thought cloud bursting was a bit of a myth; however, HYCU now makes this process totally practical.

Copyright (c) 2007-2020 – Post #27F7 – Brookend Ltd, first published on https://www.architecting.it/blog, do not reproduce without permission. This post was sponsored by HYCU Inc.